Monitoring brand visibility used to be straightforward. You opened Google Search Console, checked impressions, clicks, branded queries, and landing pages, and you had a clear picture of how people found your company.

That model is no longer sufficient.

People’s behaviour has changed. For the better part of the last 25 years, search was a single-engine market dominated by Google. It is now a multi-engine ecosystem in which ChatGPT, Perplexity, Gemini, and other LLMs play a role that can’t be ignored.

Users now get answers from systems that combine retrieval with model-generated text, update their outputs frequently, and do not follow traditional ranking logic. On a daily basis, users search or prompt:

1. Google: ~14 billion times

2. ChatGPT: ~2.5 billion times

This expansion has made tracking organic visibility much harder, not just because there are more engines, but because LLMs operate fundamentally differently from Google.

This article focuses on how to track your brand across these AI systems, what data actually matters, and which new AI SEO KPIs companies should adopt to measure visibility in an environment where Google metrics alone no longer provide the full picture.

AI Search is not Google, which makes tracking AI visibility much more complex.

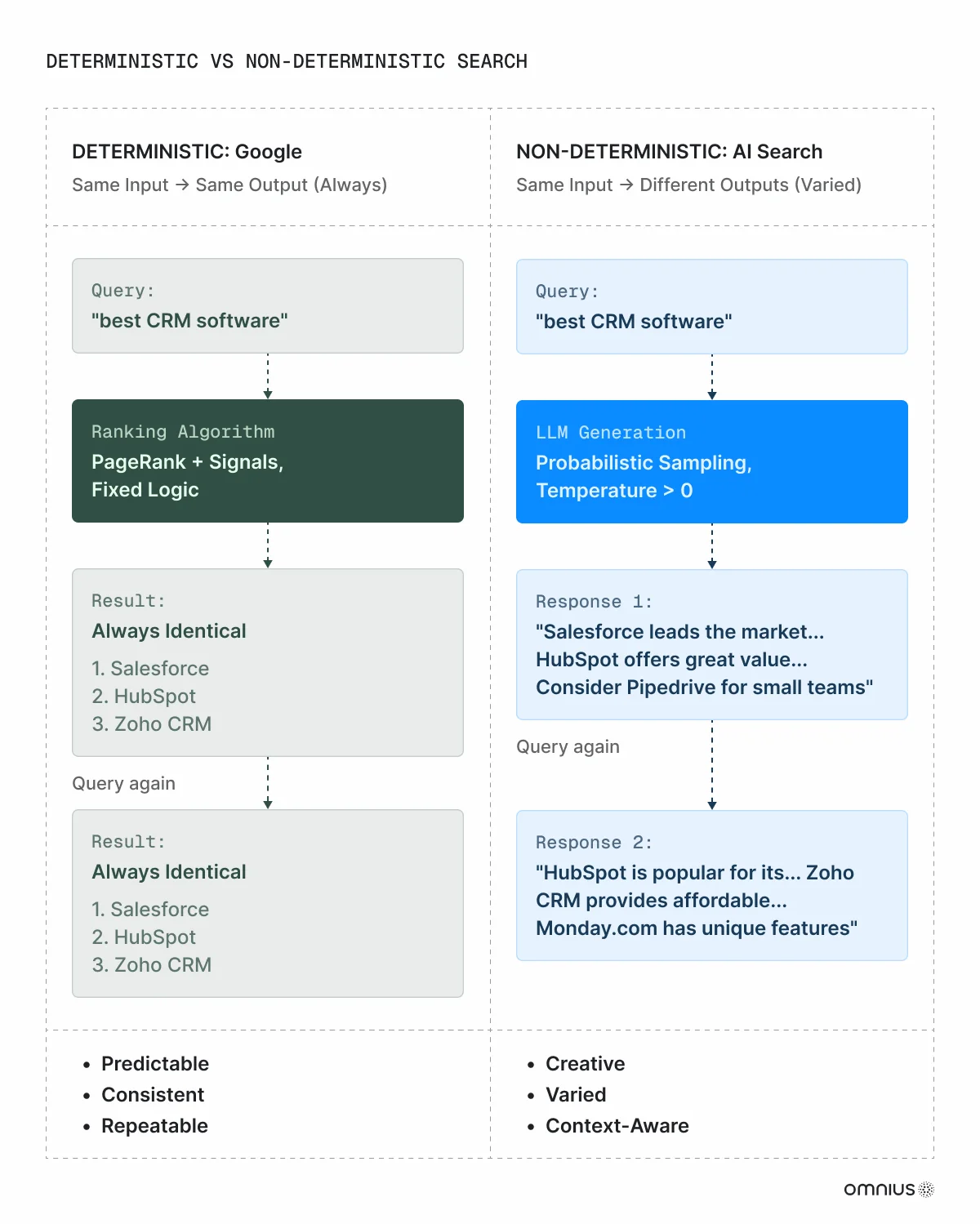

Google’s search results are largely deterministic: two users searching the same query will usually see the same ranked links, with only minor variations based on location or personalization.

AI search is non-deterministic. Responses are generated probabilistically and are influenced by:

- Prompt phrasing,

- Semantic nuance,

- Chat history,

- Model version,

- Retrieval mode,

- Temperature settings, and

- The user’s context.

The same question asked twice can produce different outputs.

Because of this, a single snapshot tells you almost nothing about your actual visibility. Tracking requires repeated runs, averaged outputs, prompt clustering, and comparisons across engines.

And this complexity scales fast.

Instead of one dominant engine, we now have multiple independent systems (ChatGPT, Gemini, Perplexity, Copilot, Claude, and region-specific LLMs), each with its own training data, retrieval logic, recency window, and citation behavior.

Visibility in one engine does not imply visibility in another.

The result is a measurement environment that behaves more like observational analytics than rank tracking. To understand how your brand appears across these systems, you need multi-run sampling, multi-model comparisons, domain-level citation mapping, and platform-level reporting.

Traditional SEO workflows only cover a small fraction of what’s happening.

AI search introduces variability, personalization, volatility, and prompt dependency, all of which make tracking significantly more complex than it was in the Google-only era.

Why should you be tracking AI search?

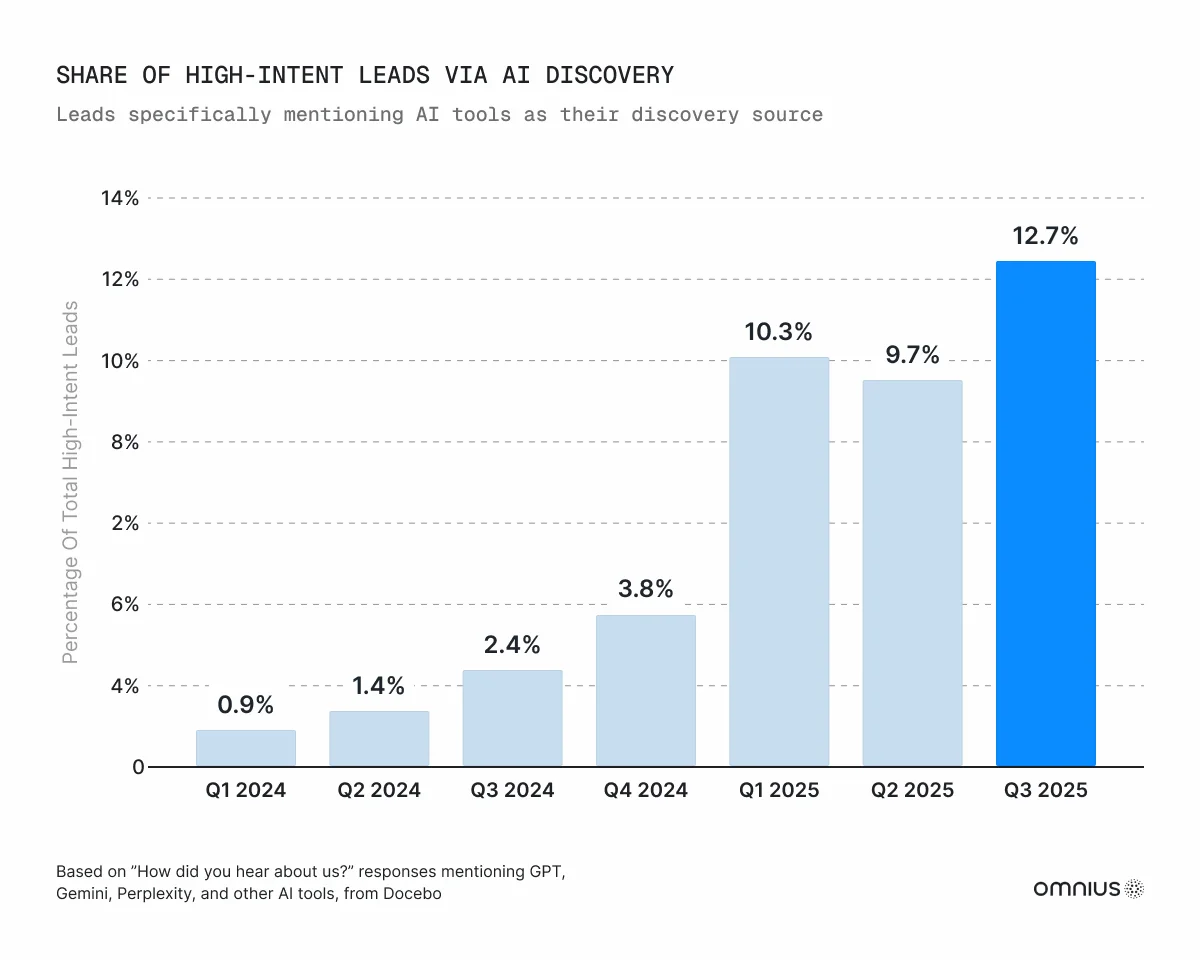

There is no need to debate whether AI search is growing. As mentioned earlier, ChatGPT alone processes roughly 2.5 billion prompts per day, not counting Perplexity, Gemini, Copilot, Claude, or geo-specific models.

A measurable share of websites (63%) now receives traffic from AI search, and user adoption is growing rapidly (~527% YoY). People coming from LLMs also tend to be better informed and further down the funnel, which leads to higher conversion rates and stronger intent signals.

AI search is not replacing Google, but it has become an unavoidable part of how people evaluate information, compare tools, and make purchase decisions. It is no longer experimental.

And the AI search opportunity couldn’t be more obvious.

For companies, this creates a simple operational truth: you cannot improve what you cannot see. The only way to understand how your brand is represented across AI engines is to track them directly.

Why is tracking only Google no longer sufficient?

The problem is that most organisations still rely exclusively on Google Search Console and GA4 to understand visibility. This creates an incomplete picture.

Google’s data reflects deterministic ranking results, while AI engines operate on non-deterministic logic.

Outputs vary based on model version, recency window, retrieval depth, user context, conversation history, and prompt phrasing. Because of this, AI visibility requires more nuanced and more frequent measurement than Google provides.

There is no one-to-one correlation between success on Google and success in AI engines.

Multiple independent studies confirm this. For example, Ahrefs found that, on average, only 12% of links cited by assistants like ChatGPT, Gemini, and Copilot appear in Google’s top 10 for the same query, and that 28% of ChatGPT’s most-cited pages have zero organic visibility in Google.

Many companies that rank well in Google appear inconsistently or inaccurately in LLM answers for reasons that traditional analytics cannot detect: outdated documentation, weak third-party authority, poor citation coverage, missing comparison content, or model recency filters that exclude older material.

This is why Google-only tracking is no longer sufficient. It cannot measure:

- Non-deterministic answer variability

- Model-to-model differences

- Citation patterns across engines

- Recency filters that suppress older brand content

- Prompt-dependent shifts in brand representation

Tracking AI search visibility requires a more complex approach: repeated runs, averaged outputs, prompt clustering, multi-engine comparisons, domain-level citation mapping, and platform-level reporting.

These elements cannot be replicated with Google Search Console, GA4, or standard BI dashboards.

To get an objective view of how your brand actually appears across AI systems, Google metrics must be supplemented with AI-specific tracking tools that reflect how modern engines work. Without this layer, organisations operate with incomplete visibility and risk making decisions based on misleading or outdated information.

How AI Visibility tracking works

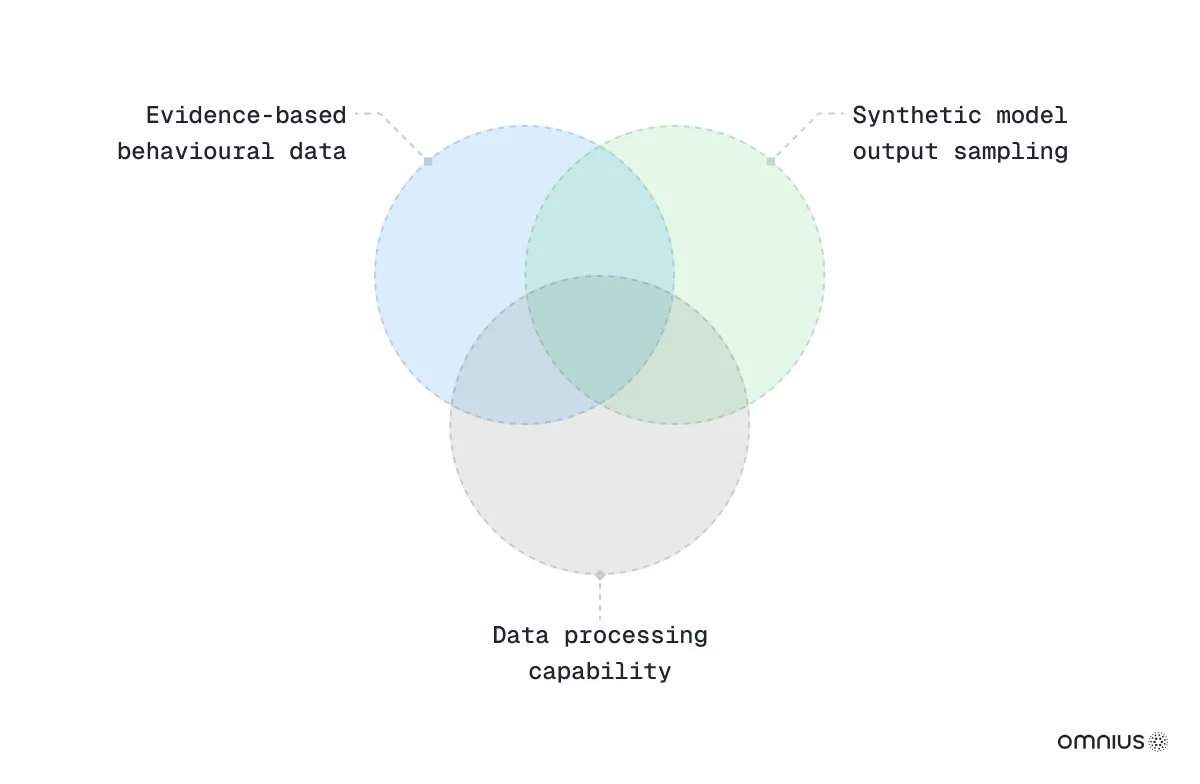

Before going further, it helps to understand how AI visibility tracking works under the hood. To measure brand visibility inside ChatGPT, Gemini, Perplexity, Copilot, and other engines, tools rely on two core data sources:

- Evidence-based user behavior

- Synthetic model output sampling

Everything we see in dashboards, reports, or visibility scores is a product of how these two layers are collected, processed, and combined.

Evidence-based user behavior

This is the part most teams already know, but often underestimate when thinking about AI search. Analytics tools like GA4, GTM, server logs, Microsoft Clarity, and BI systems identify real traffic from AI engines by looking at referrers such as chatgpt.com, chat.openai.com, perplexity.ai, claude.ai, and others.
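As a rough illustration of the referrer-based approach, here is a minimal Python sketch that maps a session's referrer URL to an AI engine. The hostname list is an assumption (check it against the referrers that actually appear in your own logs), and `classify_referrer` is a hypothetical helper, not part of GA4 or any other analytics tool.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical mapping of referrer hostnames to AI engines.
# Extend or correct it based on the referrers you actually see in your data.
AI_REFERRERS = {
    "chat.openai.com": "ChatGPT",
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "claude.ai": "Claude",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer: str) -> Optional[str]:
    """Return the AI engine name for a referrer, or None if it is not an AI engine."""
    # urlparse only fills .hostname when a netloc is present, so prefix bare hosts.
    if "//" not in referrer:
        referrer = "//" + referrer
    host = (urlparse(referrer).hostname or "").lower()
    for domain, engine in AI_REFERRERS.items():
        # Match the domain itself or any subdomain (e.g. www.perplexity.ai),
        # but not look-alikes such as notperplexity.ai.
        if host == domain or host.endswith("." + domain):
            return engine
    return None
```

Run over exported session or log data, for example `Counter(classify_referrer(r) for r in referrers)`, this gives a per-engine count of AI-referred sessions (with `None` for everything else).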

Evidence-based data shows what actually happened:

- where AI users landed

- how they behaved

- how fast they converted

- which pages generate the most AI-driven interest

- how AI visitors differ from organic or paid visitors

This layer is essential because it confirms real user behavior, not just what the models say.

Synthetic model output sampling

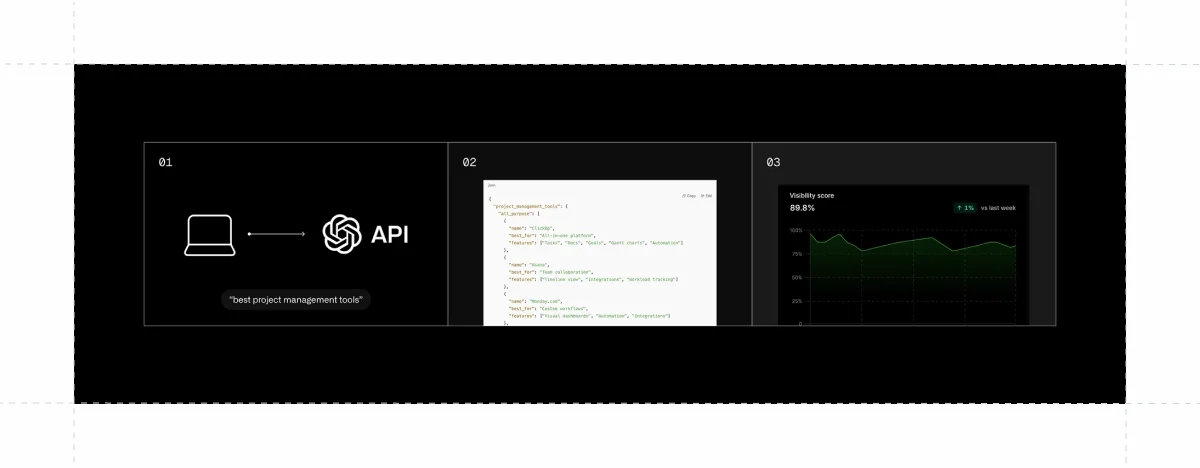

Behavioral data alone cannot explain how AI engines view a brand or why they choose to mention or exclude it. For that, visibility tools generate structured, controlled queries across ChatGPT, Gemini, Perplexity, Copilot, and other models.

These tools run hundreds or thousands of prompts that reflect real buyer behavior, such as:

- best [category] tools

- alternatives to [brand]

- [brand] vs [competitor]

- pricing, implementation, integrations, and market-fit queries

Because LLMs are non-deterministic, a single answer is not reliable. The same query can produce different outputs depending on recency, model version, retrieval depth, user context, conversation history or even minor wording differences. This is why proper AI visibility tracking requires:

- multiple runs of every prompt

- prompt variations

- model-to-model comparisons

- region and version sampling

- citation extraction and clustering

- parsing brand mentions and competitor mentions into structured data

- visibility scoring across engines and prompt clusters
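The multi-run requirement above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `ask` is a hypothetical callable standing in for whichever API client or browser automation you use to query each engine, prompt variations are simply passed as extra entries in `prompts`, and mention parsing is a naive whole-word match rather than real entity extraction.

```python
import re
from collections import defaultdict
from typing import Callable, Dict, List

# Hypothetical signature: ask(engine, prompt) -> answer text.
AskFn = Callable[[str, str], str]


def mentioned_brands(answer: str, brands: List[str]) -> List[str]:
    """Naive mention parser: case-insensitive whole-word match per brand name.

    Brand names ending in punctuation (e.g. "C++") would need a different pattern.
    """
    return [b for b in brands
            if re.search(r"\b" + re.escape(b) + r"\b", answer, re.IGNORECASE)]


def sample(ask: AskFn, engines: List[str], prompts: List[str],
           brands: List[str], runs: int = 5) -> List[dict]:
    """Run every prompt `runs` times on every engine and store parsed mentions."""
    records = []
    for engine in engines:
        for prompt in prompts:
            for run in range(runs):
                answer = ask(engine, prompt)
                records.append({
                    "engine": engine,
                    "prompt": prompt,
                    "run": run,
                    "mentions": mentioned_brands(answer, brands),
                })
    return records


def mention_rate(records: List[dict], brand: str) -> Dict[str, float]:
    """Share of sampled answers per engine in which `brand` was mentioned."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for r in records:
        totals[r["engine"]] += 1
        hits[r["engine"]] += brand in r["mentions"]
    return {engine: hits[engine] / totals[engine] for engine in totals}
```

Keeping every run as a separate record, instead of only the final average, makes it possible to later slice the same data by prompt, engine, or sampling period.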

Synthetic data shows how engines describe your brand, how often you appear, which pages they cite, and where competitors outperform you.

Objectivity is at the intersection

Evidence-based behavior and synthetic model output solve different parts of the AI visibility problem, and neither is reliable alone.

Evidence-based data shows the traffic, conversions, and behavior coming from AI engines, but it cannot explain why models mention or exclude your brand.

Synthetic sampling shows how engines position you, which prompts you appear in, and where competitors dominate, but it does not confirm whether those mentions translate into meaningful outcomes.

You only get an objective “bigger picture” when both layers are combined, because this is what reveals where visibility is strong or weak, which gaps actually matter, and what needs to be fixed first.

Data processing becomes the third component, or more precisely, how quickly it delivers value.

AI search results change fast. Recency affects what engines cite, and answer structures shift as models update. The advantage now comes from how quickly you can interpret the data, implement changes, and analyze the next sampling round.

Collecting data is easy; acting on it in a volatile ecosystem is the hard part.

When choosing how to track AI search, your stack should reflect all three pillars: synthetic model visibility, evidence-based user behavior, and the ability to process data fast enough.

Some teams build this by combining multiple tools. Others rely on a unified platform like Atomic AGI that integrates both data types and the processing layer. The principle is the same: without all three, you never get a complete or stable view of your brand’s true position across AI engines.

Once these three pillars are in place, the next step is to decide what you actually track. This is where a dedicated set of LLM-focused KPIs becomes essential.

New (AI) SEO KPIs you should start tracking

If you want your SEO strategy to actually reflect how discovery works in 2026, you need a KPI layer that covers AI search, not just Google. Below are the core metrics that are worth tracking and setting as explicit goals.

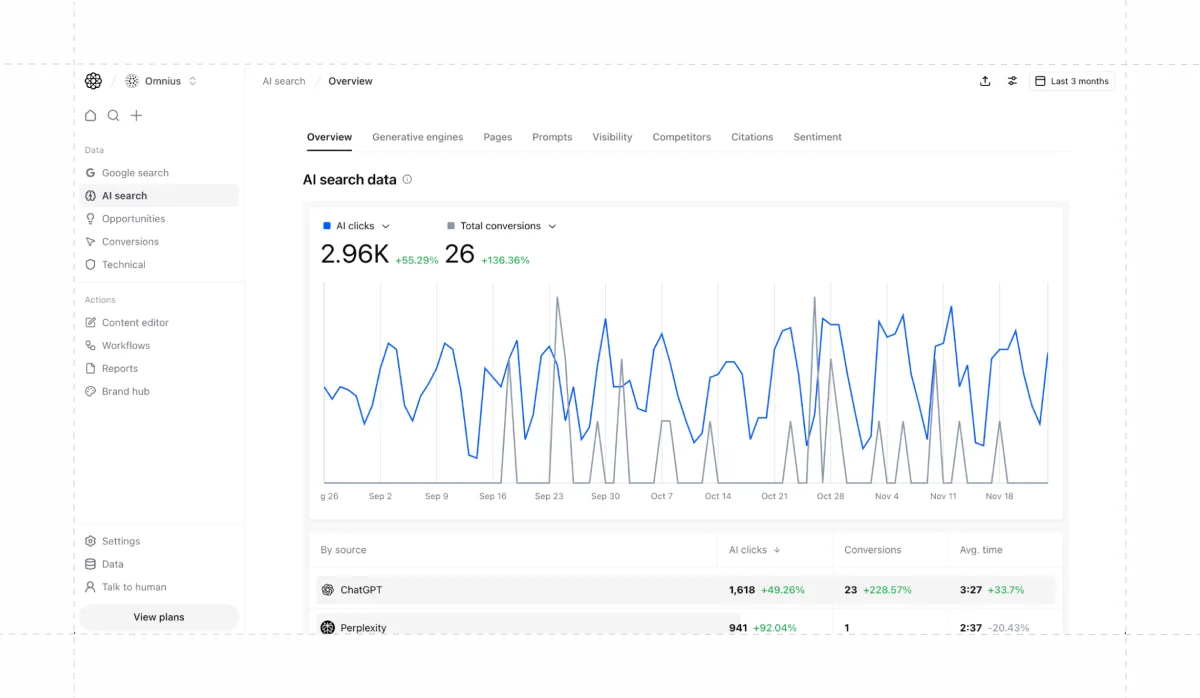

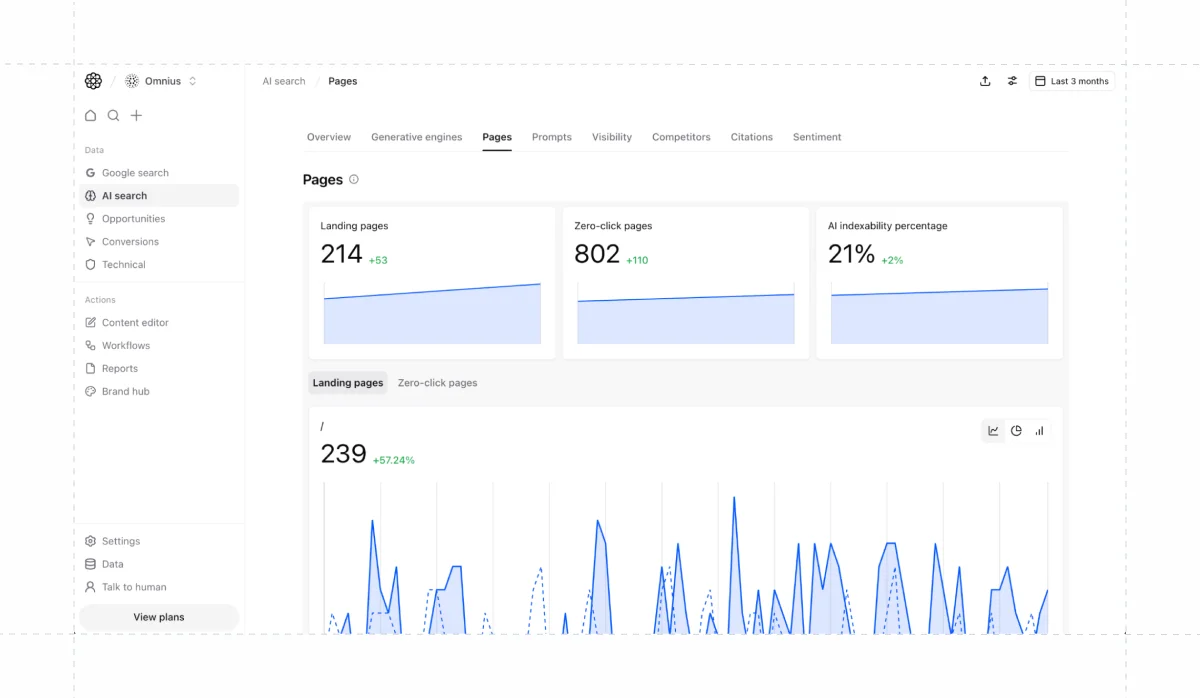

AI Visibility (Behavioral / Evidence-Based)

This is the closest equivalent to traditional SEO visibility, but isolated to AI engines. Instead of impressions and SERP positions, AI visibility is measured through real user behavior coming from AI platforms.

This includes:

- clicks coming from AI engines

- engaged sessions and scroll depth

- conversion performance (direct and assisted)

- landing page distribution for AI-referred traffic

How it is technically tracked:

- AI engines are added as a standalone acquisition channel.

- Traffic is filtered using referral domains, network signatures, and device patterns.

- Sessions are grouped by engine to show which platforms drive meaningful downstream engagement.

- Conversion events, session depth, and funnel progression are compared against organic and direct.

- Assisted conversions are modeled using attribution logic to understand whether AI exposure increases branded search or direct traffic.
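A minimal sketch of the channel comparison, assuming sessions have already been labelled with a channel (for instance with a referrer classifier) and exported from your analytics tool. The `Session` fields are hypothetical, and only direct conversions are counted; assisted-conversion modelling needs attribution logic and is not covered here.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Session:
    channel: str     # e.g. "ChatGPT", "Perplexity", "Organic Search", "Direct"
    engaged: bool    # your own definition of an engaged session
    converted: bool  # whether a conversion event fired in the session


def channel_report(sessions: List[Session]) -> Dict[str, Dict[str, float]]:
    """Per-channel session count, engagement rate, and direct conversion rate."""
    buckets: Dict[str, List[Session]] = defaultdict(list)
    for s in sessions:
        buckets[s.channel].append(s)
    report = {}
    for channel, group in buckets.items():
        n = len(group)
        report[channel] = {
            "sessions": n,
            "engagement_rate": sum(s.engaged for s in group) / n,
            "conversion_rate": sum(s.converted for s in group) / n,
        }
    return report
```

Putting AI engines and organic search in the same report keeps the comparison on identical definitions of "engaged" and "converted".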

This metric shows the real-world effect of your AI visibility - the part that impacts revenue and user behavior.

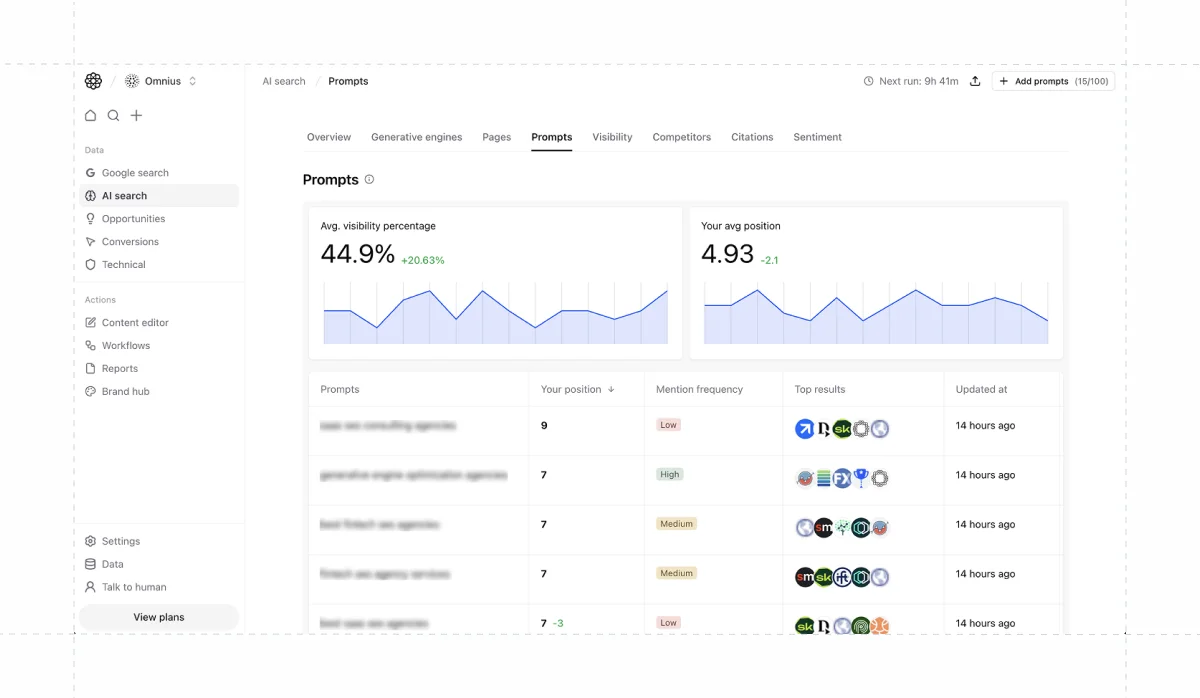

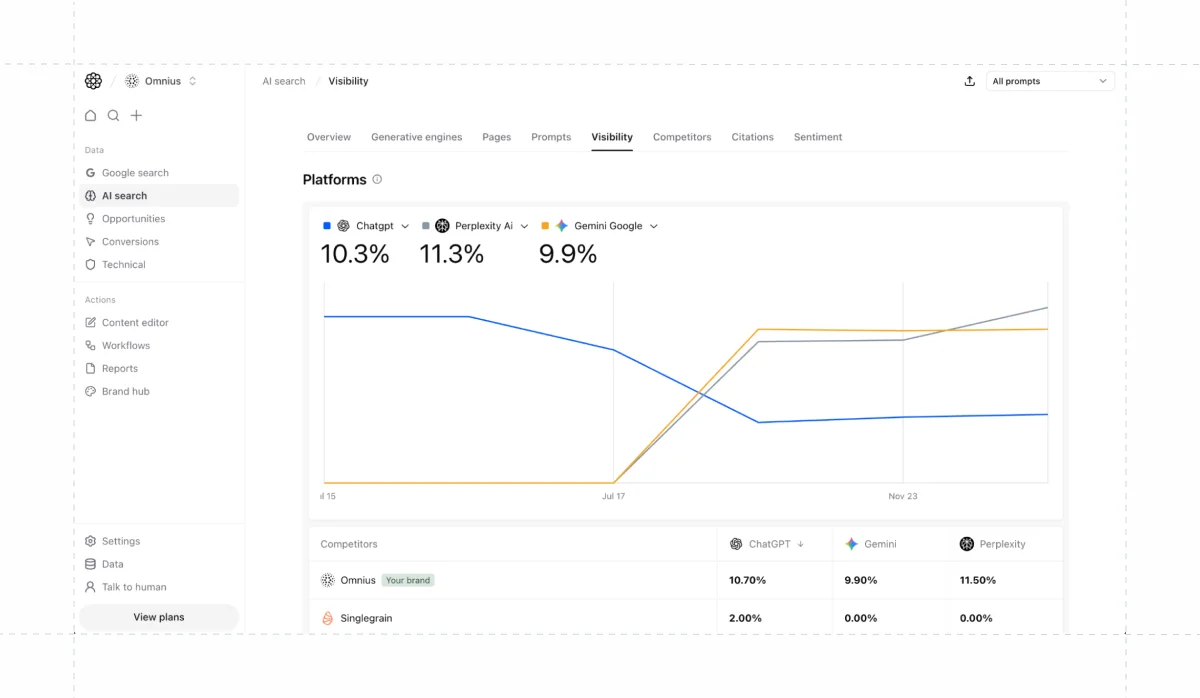

AI Visibility & Share of Voice (Synthetic)

This KPI captures how often your brand appears in AI-generated answers and how often it appears relative to competitors. It reflects your representation inside the model outputs - not user behavior, but model behavior.

This is the synthetic layer that explains “model perception”:

- how frequently your brand is mentioned

- how consistently you appear across prompts

- which engines include or exclude you

- how your visibility compares to your competitor set

How it is technically tracked:

- A structured prompt set is defined (category, use case, comparison, alternatives, pricing, integration prompts).

- Each prompt is run multiple times per engine to offset model nondeterminism.

- Answers are parsed for brand mentions using entity extraction.

- Mentions are averaged across all runs to produce a stable visibility score.

- Share of Voice is calculated as:

your mentions / total mentions of all brands inside the same prompt cluster
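Assuming each sampled answer in a prompt cluster has already been parsed into a deduplicated list of brand mentions, the averaged visibility score and the Share of Voice formula above reduce to simple counting. A minimal Python sketch; the brand names in the usage example are placeholders.

```python
from collections import Counter
from typing import Dict, List


def visibility_score(answers: List[List[str]], brand: str) -> float:
    """Fraction of sampled answers (all runs) that mention `brand`."""
    if not answers:
        return 0.0
    return sum(brand in mentions for mentions in answers) / len(answers)


def share_of_voice(answers: List[List[str]]) -> Dict[str, float]:
    """Each brand's mentions divided by total mentions of all brands in the cluster.

    Each inner list is assumed to be deduplicated, so a brand counts at most once
    per answer.
    """
    counts = Counter(brand for mentions in answers for brand in mentions)
    total = sum(counts.values())
    return {brand: c / total for brand, c in counts.items()} if total else {}


# Four sampled answers from one prompt cluster (placeholder brands).
cluster = [["Acme", "Globex"], ["Acme"], ["Globex", "Initech"], []]
print(visibility_score(cluster, "Acme"))  # 0.5
print(share_of_voice(cluster))            # {'Acme': 0.4, 'Globex': 0.4, 'Initech': 0.2}
```

The two numbers answer different questions: visibility measures how often you appear at all, while Share of Voice measures how much of the conversation you hold relative to competitors.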

This is the AI equivalent of “rank + market share”, but for generative engines instead of SERPs.

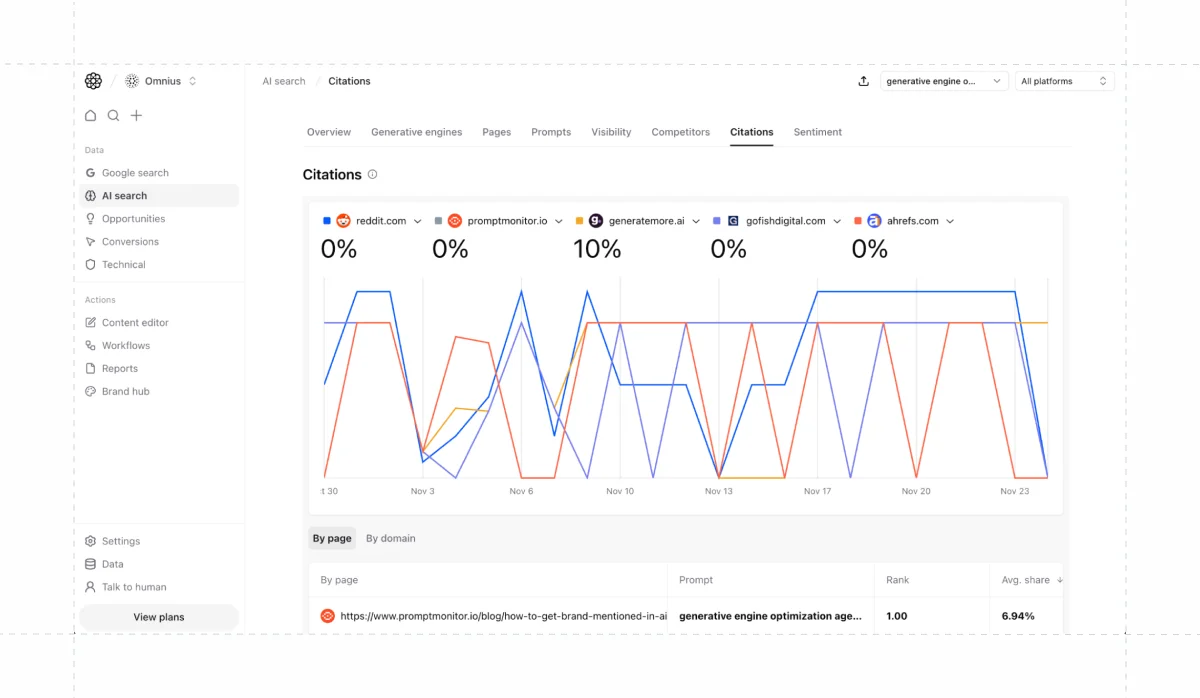

Citation rate and source-level quality

Visibility is appearance. Citations are the evidence behind that appearance. This KPI measures how often AI engines cite your domain or external sources connected to your brand.

How it is technically tracked:

- Each AI answer is parsed for outbound links, explicit citations, or referenced domains.

- All citations are mapped to:

  - your own domain

  - your subdirectories (docs, blog, product pages)

  - third-party sources (reviews, analysts, communities)

- The model output is normalized across sampling runs and prompt clusters.

- You track the frequency, distribution, and quality of those citations.
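The citation mapping step might look like this in its simplest form: pull URLs out of each answer, then bucket them into your own sections versus third-party hosts. This is a sketch under assumptions: citations are plain URLs in the answer text (engines that return structured citation lists would need their own parsing), the first path segment or subdomain is treated as the "section", and `example.com` stands in for your domain.

```python
import re
from collections import Counter
from typing import List
from urllib.parse import urlparse

# Rough URL pattern: stops at whitespace, closing brackets, and quotes.
URL_RE = re.compile(r"https?://[^\s)\]>\"']+")


def classify_citation(url: str, own_domain: str) -> str:
    """Map a cited URL to 'own:<section>' or 'third-party:<host>'."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    if host == own_domain:
        # First path segment (docs, blog, pricing, ...) is treated as the section.
        section = parsed.path.strip("/").split("/")[0] or "home"
        return f"own:{section}"
    if host.endswith("." + own_domain):
        # Subdomains such as docs.example.com count as their own section.
        return f"own:{host[: -len(own_domain) - 1]}"
    return f"third-party:{host}"


def citation_profile(answers: List[str], own_domain: str) -> Counter:
    """Count citations per bucket across many sampled answers."""
    profile: Counter = Counter()
    for answer in answers:
        for url in URL_RE.findall(answer):
            # Drop sentence punctuation that the pattern may have captured.
            profile[classify_citation(url.rstrip(".,;"), own_domain)] += 1
    return profile
```

Comparing these counts across sampling periods shows whether engines are shifting from third-party sources toward your own docs or blog, or the other way around.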

A rising citation rate is one of the strongest indicators that LLMs trust your content.

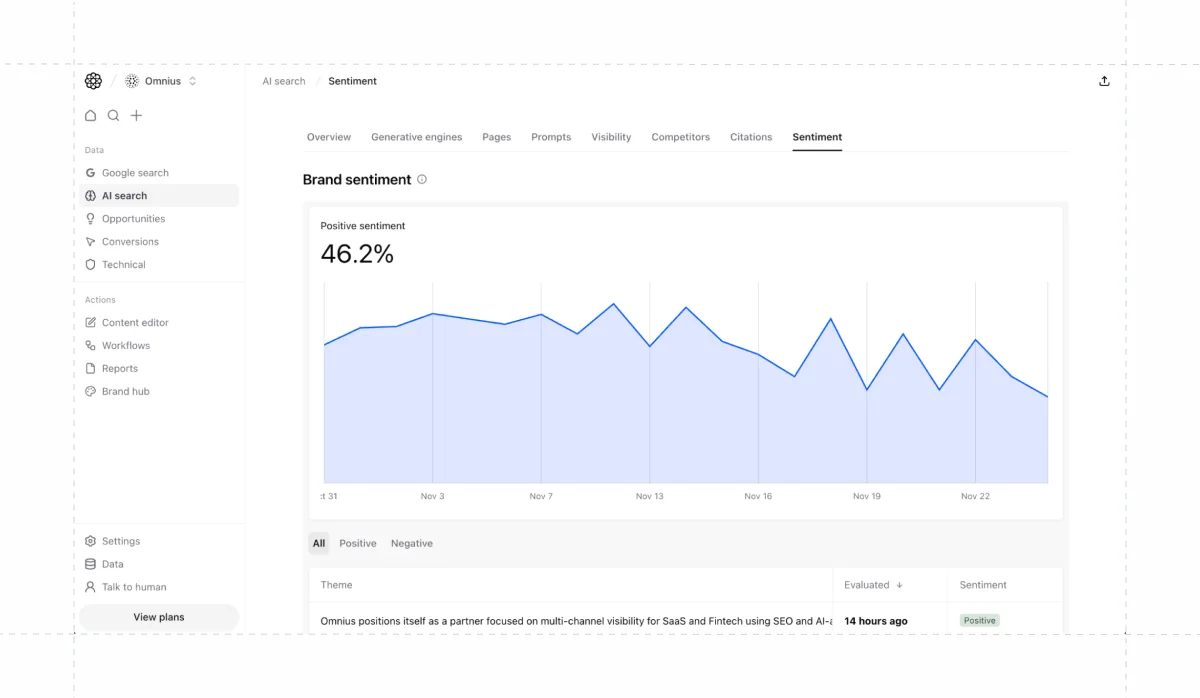

Sentiment accuracy & contextual framing

This KPI measures how the brand is described: positive, neutral, outdated, or inaccurate.

How it is technically tracked:

- Answers are broken into statements about your brand.

- NLP scoring (polarity, subjectivity) and rule-based checks identify sentiment patterns.

- A classifier flags outdated data (old pricing, deprecated features, wrong ICP).

- Contextual misclassifications are logged: wrong category, wrong positioning, wrong industry, etc.
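To make the rule-based part concrete, here is a minimal sketch that flags outdated pricing and deprecated features in statements about a brand. The `CURRENT_FACTS` dictionary is a hypothetical source of truth you would maintain yourself, and sentence splitting is a simple regex. Polarity/subjectivity scoring and a trained classifier are out of scope for this example.

```python
import re
from typing import Dict, List, Optional

# Hypothetical source of truth that you would maintain yourself.
CURRENT_FACTS = {
    "price": 49.0,                               # current monthly price in USD
    "deprecated": ["Classic Editor", "v1 API"],  # retired feature names
}


def brand_statements(answer: str, brand: str) -> List[str]:
    """Split an answer into sentences and keep the ones that mention the brand."""
    sentences = re.split(r"(?<=[.!?])\s+", answer.strip())
    return [s for s in sentences if brand.lower() in s.lower()]


def flag_outdated(answer: str, brand: str, facts: Optional[Dict] = None) -> List[str]:
    """Rule-based checks for stale dollar prices and deprecated feature names."""
    facts = facts or CURRENT_FACTS
    flags = []
    for s in brand_statements(answer, brand):
        # Any dollar amount that differs from the current price is flagged.
        for price in re.findall(r"\$(\d+(?:\.\d+)?)", s):
            if float(price) != facts["price"]:
                flags.append(f"stale price ${price}: {s}")
        for feature in facts["deprecated"]:
            if feature.lower() in s.lower():
                flags.append(f"deprecated feature '{feature}': {s}")
    return flags
```

Rules like these are crude, but they are transparent and cheap to run on every sampled answer, which makes them a useful first filter before manual review.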

This KPI highlights where LLMs misunderstand your brand and where content or documentation updates are required.

Platform coverage

Platform coverage tracks how consistent your visibility is across AI engines (ChatGPT, Gemini, Perplexity, Copilot, Claude, etc.).

How it is technically tracked:

- The same prompt set is run across all engines.

- Visibility, citation rate, and share of voice are calculated separately per engine.

- KPIs are mapped into a matrix to show where visibility is strong, weak, or absent.
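Once per-engine KPIs exist, the coverage matrix is mostly a labelling step. In this sketch, KPI values are assumed to be normalized to the 0-1 range, and the single 0.5 threshold separating "strong" from "weak" is an arbitrary placeholder to tune for your category and prompt set.

```python
from typing import Dict

STRONG_THRESHOLD = 0.5  # arbitrary placeholder; tune per category and prompt set


def label(value: float) -> str:
    """Map a normalized KPI value (0-1) to a coverage label."""
    if value <= 0:
        return "absent"
    return "strong" if value >= STRONG_THRESHOLD else "weak"


def coverage_matrix(kpis: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, str]]:
    """kpis[engine][kpi_name] -> value; returns the same engine x KPI grid as labels."""
    return {engine: {name: label(value) for name, value in row.items()}
            for engine, row in kpis.items()}
```

For example, `coverage_matrix({"ChatGPT": {"visibility": 0.62, "share_of_voice": 0.3}, "Gemini": {"visibility": 0.0}})` labels ChatGPT visibility as strong, ChatGPT share of voice as weak, and Gemini visibility as absent.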

This identifies platform-specific weaknesses where competitors may be significantly ahead.

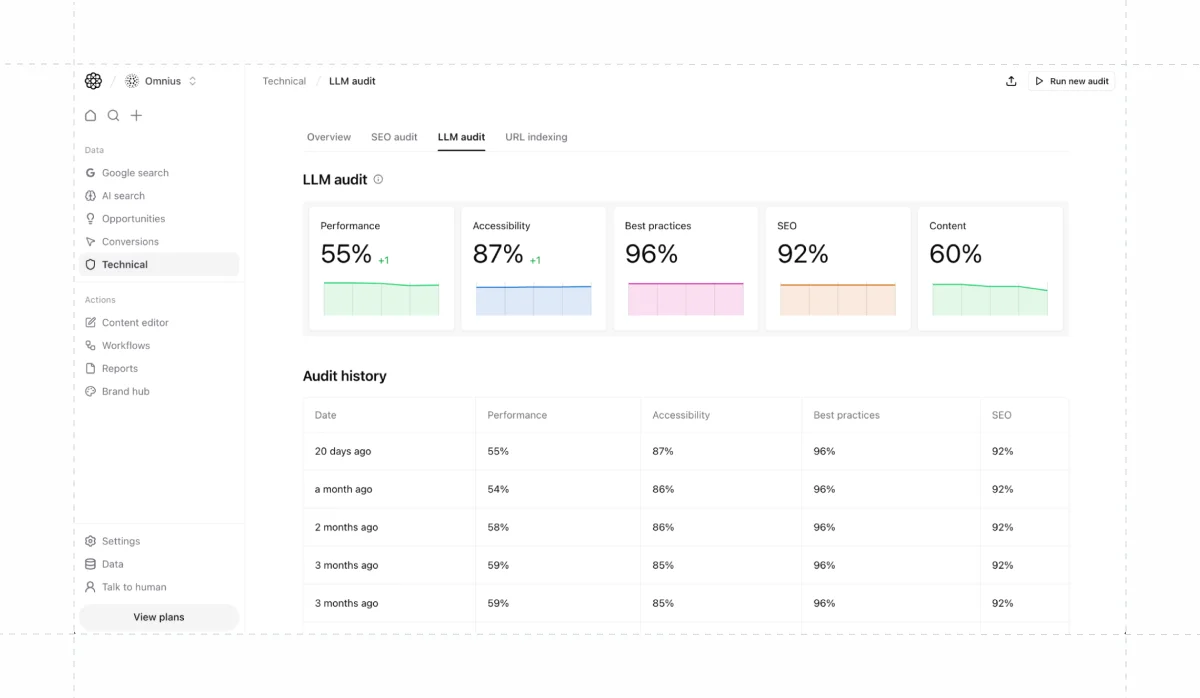

LLM Technical Health Score

AI engines rely on retrieval systems (RAG pipelines, search indexes, structured parsing) before generating answers. This KPI measures how “machine-readable” and AI-friendly your content ecosystem is.

How it is technically tracked:

- Structured data coverage (Organization, Product, SoftwareApplication, FAQ)

- Clean HTML hierarchy and entity consistency

- File structure and content clarity

- Page freshness and update intervals

- Crawlability, rendering, and load performance

- Coherence across product pages, docs, and marketing content

- NLP clarity signals (ambiguity, keyword noise, conflicting statements)
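One of the checks above, structured data coverage, can be approximated with Python's standard library by reading the JSON-LD blocks on a page and comparing the declared `@type` values against a required set. Assumptions: the site uses JSON-LD rather than microdata or RDFa, and the required set is a placeholder for your own template requirements (schema.org's FAQ type is named `FAQPage`).

```python
import json
from html.parser import HTMLParser
from typing import List, Set

# Placeholder requirement set; adjust per page template.
REQUIRED_TYPES = {"Organization", "Product", "SoftwareApplication", "FAQPage"}


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def schema_types(html: str) -> Set[str]:
    """Return every @type declared in the page's JSON-LD (including @graph items)."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    found: Set[str] = set()
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # malformed JSON-LD is skipped here; worth logging in practice
        if isinstance(data, dict):
            items = data.get("@graph", [data])
        else:
            items = data if isinstance(data, list) else []
        for item in items:
            if not isinstance(item, dict):
                continue
            declared = item.get("@type")
            if isinstance(declared, str):
                found.add(declared)
            elif isinstance(declared, list):
                found.update(t for t in declared if isinstance(t, str))
    return found


def missing_types(html: str) -> Set[str]:
    """Required schema types that the page does not declare."""
    return REQUIRED_TYPES - schema_types(html)
```

Run across a crawl of your strategic templates, the share of pages with an empty `missing_types` result gives one concrete input to the health score.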

This is the LLM-aligned evolution of a technical SEO health score - optimized for AI retrieval, not SERP ranking.

AI Indexing Ratio

This KPI measures how many of your pages are actually used by AI engines - not just crawled or technically indexable.

How it is technically tracked:

- Count of unique URLs that:

  - receive AI traffic, or

  - are cited in AI outputs

- Divided by the total number of strategic pages/templates you want AI engines to use.

- Repeated across sampling periods to track expansion or stagnation.
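The ratio itself is simple set arithmetic. Most of the work is URL normalization, so that a tracked landing page with UTM parameters and a cited URL with a trailing slash count as the same page. A minimal sketch, assuming the three URL lists come from your analytics export, your citation parsing, and your own list of strategic pages, and that only strategic pages count toward the numerator:

```python
from typing import Iterable
from urllib.parse import urlparse


def normalize(url: str) -> str:
    """Lowercase host, drop 'www.', query string, fragment, and trailing slashes."""
    p = urlparse(url)
    host = (p.hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host + (p.path.rstrip("/") or "/")


def ai_indexing_ratio(strategic: Iterable[str], ai_traffic: Iterable[str],
                      cited: Iterable[str]) -> float:
    """Share of strategic URLs that received AI traffic or were cited in AI outputs."""
    targets = {normalize(u) for u in strategic}
    if not targets:
        return 0.0
    used = ({normalize(u) for u in ai_traffic} | {normalize(u) for u in cited}) & targets
    return len(used) / len(targets)
```

Recomputing this per sampling period, with the same strategic list, shows whether AI participation is expanding across your content inventory or stuck on a handful of pages.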

This ratio shows which parts of your content inventory actually participate in AI search.

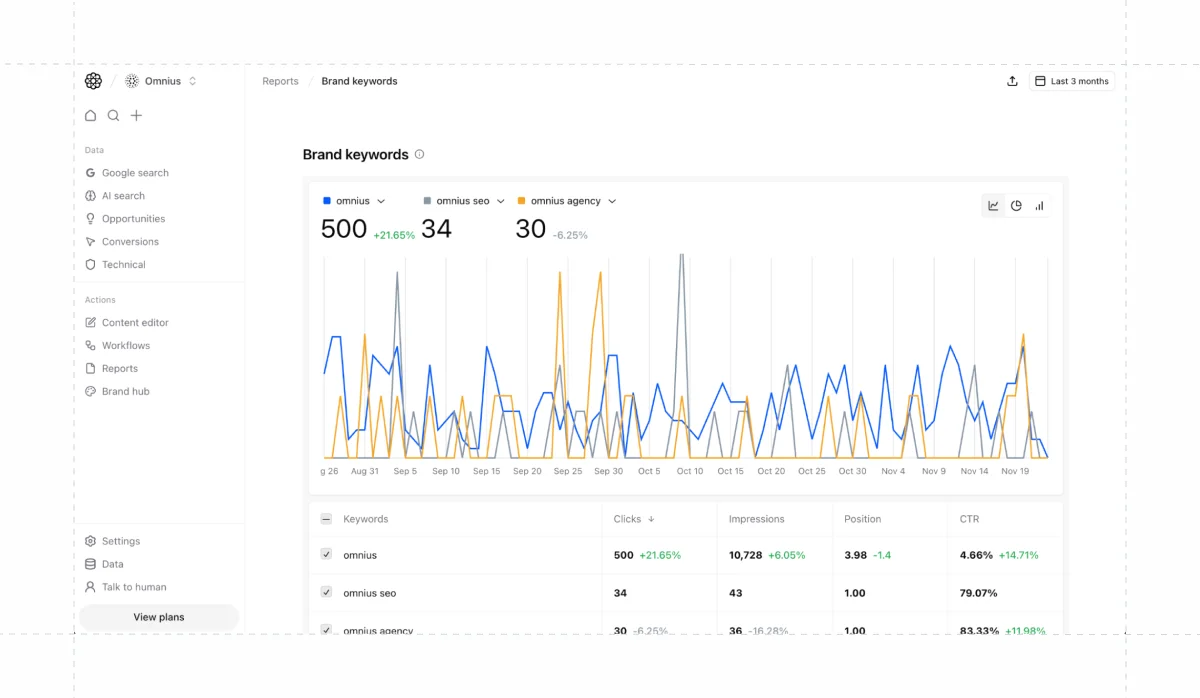

Branded Search

Branded search is a supporting KPI that helps you understand the downstream impact of AI visibility. Many users discover brands inside AI engines first, then go to Google to search the brand name directly.

For example, someone may ask ChatGPT for “best CRM for SaaS,” receive a shortlist, and then type each brand name into Google to evaluate them further. These sessions appear as branded organic clicks, even though the initial intent originated from an AI engine.

How it is tracked:

- Monitor branded impressions and branded clicks in Google Search Console.

- Track changes in branded CTR and growth in brand-name modifiers (pricing, review, integration, vs-queries).

- Compare trends against increases in AI visibility and share of voice to identify correlation.
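For the correlation step, a plain Pearson coefficient between weekly AI share of voice and weekly branded clicks is a reasonable starting point, optionally with a lag because branded searches may trail AI exposure. This is a sketch: the weekly series and the lag value are assumptions, and a high coefficient indicates co-movement, not attribution.

```python
from math import sqrt
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


def lagged_correlation(sov: Sequence[float], branded: Sequence[float], lag: int = 0) -> float:
    """Correlate share of voice in week t with branded clicks in week t + lag."""
    if lag:
        sov, branded = sov[:-lag], branded[lag:]
    return pearson(sov, branded)
```

Trying a few small lags (for example 0 to 4 weeks) and looking at where the coefficient peaks is a simple way to see whether branded demand tends to follow AI visibility.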

Branded search does not replace synthetic AI visibility or behavioral AI metrics, but it provides an additional signal that your AI presence is influencing real demand, awareness, and consideration inside Google.

When AI visibility and share of voice move up and branded search follows, you have a strong, practical confirmation that AI engines are contributing to your search demand, even if analytics cannot attribute that path directly.

Conclusion

Search has evolved from a predictable, rank-based environment to a fragmented, probabilistic, multi-engine ecosystem.

Google still dominates transactional discovery, but LLMs now influence a rapidly growing portion of evaluation, comparison, and early-stage research. The way users form opinions has changed - and the way companies measure visibility must change with it.

The old workflow of checking GSC impressions and calling it a day no longer reflects how people find brands. AI engines generate answers that differ by prompt, model version, recency window, context, and user behavior.

They cite different sources, favor different content structures, and update outputs daily. Companies that continue relying on Google-only metrics are operating with an incomplete understanding of how they are perceived in the market.

Modern visibility requires two equally important layers:

- Behavioral evidence (real traffic, real conversions, real signals)

- Synthetic model sampling (how LLMs describe the brand, what they cite, where they include or exclude it)

This dual tracking is the baseline for objectivity. Without both, teams cannot see visibility gaps, cannot understand why LLMs fail to mention them, and cannot identify AI-driven demand that later appears as branded search inside Google.

The KPI layer you track ultimately determines the accuracy of the decisions you make. AI visibility, share of voice, citation quality, sentiment accuracy, platform coverage, technical LLM health, indexing ratios, and branded search are the new operational metrics that reflect how discovery actually works in 2026.

The companies that adapt early will have a structural advantage: they will understand how they are represented across engines, fix misalignments faster, strengthen the sources models rely on, and capture the demand shifts others cannot even see.

One last note

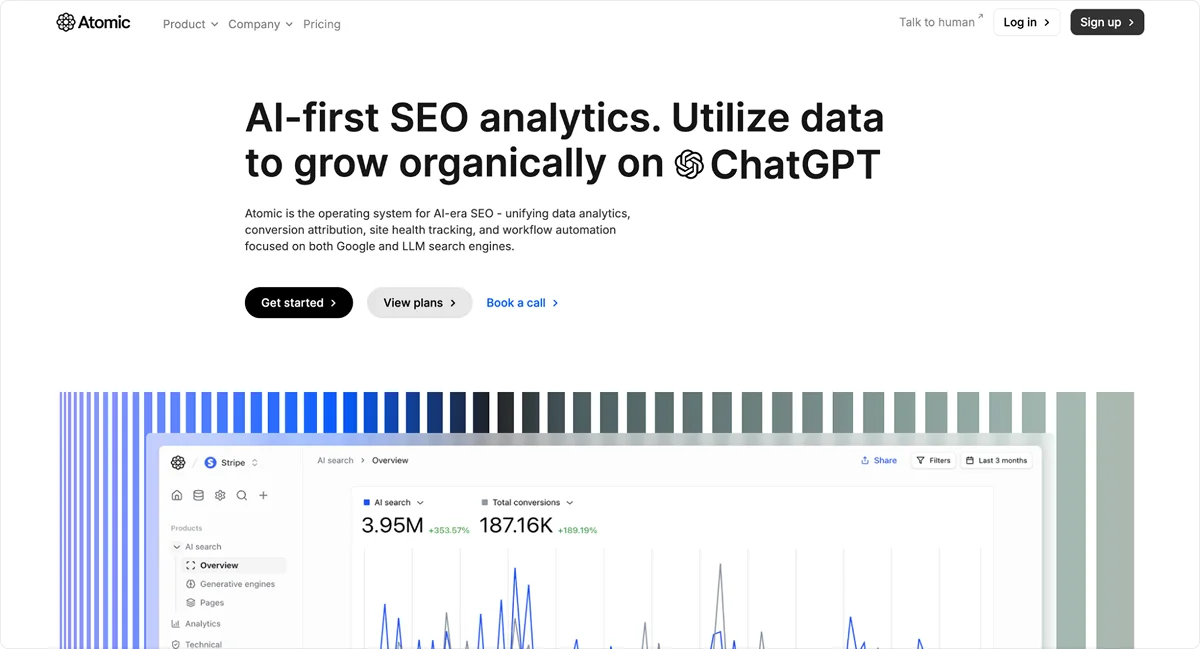

Almost every example in this article comes from Atomic AGI, the AI-first SEO analytics platform we built internally at Omnius over the last two years.

The reason is simple: in 2023, it became obvious that whoever owns the data will own the market. At the time, the Google + AI search ecosystem was expanding fast, and the early signals were clear in our work with some of the best SaaS, Fintech & AI companies.

SEO was inevitably changing, but most of the industry was still responding with repackaged services and new acronyms (AEO, GEO, AIO) without any real understanding of how AI search actually behaved.

The problem was easy to see: agencies were selling the promise of "AI search optimisation," while the tools they relied on were mostly synthetic-only dashboards - sampling a few ChatGPT or Perplexity answers and turning them into visibility charts full of false positives.

In reality, 90% of these tools were built on synthetic data modelling with no connection to real user behavior, no grounding in evidence, and no understanding of model volatility. It was snake-oil territory, and the market was crowded with quick opportunists.

We decided to take a very different approach.

Our logic was: if SEO is becoming multi-engine and probabilistic, we need a technology layer that measures it properly. Not theory. Not speculation. Actual data.

So we built Atomic AGI around three principles:

- More comprehensive (multiple engines)

- More precise & closer to real-time

- Centralised, not siloed (because much of the value comes from connecting all of that data).

That was two years ago.

Since then, Atomic AGI has helped us identify what actually works in AI search, what doesn’t, and what is pure industry myth. Based on thousands of experiments, we developed the first repeatable AI Search Optimisation protocols and launched them as a service in early 2025.

Today, Omnius is recognised as one of the leading companies in multi-engine SEO for SaaS and Fintech - and one of the very few agencies globally that also owns the underlying technology layer, which is now one of our strongest differentiators.

The principle remains the same:

Own the data > own the market.

And in a world where discovery is no longer controlled by one engine, but by a distributed network of models, understanding how these systems interpret your brand is no longer optional. It is the new foundation of organic visibility.

This article is built on that foundation.
