The current conversation around artificial intelligence is a noisy mix of apocalypse, utopia, and corporate FOMO. Understanding the state of AI in 2025 requires focusing on historical patterns, market economics, and observable behavior - not speculative forecasts.

This report organizes the evidence into a set of lessons about what AI is, what it is not, and what the emerging market structure suggests about the next decade.

Note: This report draws heavily on “AI Eats the World,” a lecture by Benedict Evans, one of the most respected technology analysts of the past decade. Evans is known for his work at Andreessen Horowitz and for his widely cited essays on industry structure, platforms, and technology adoption cycles.

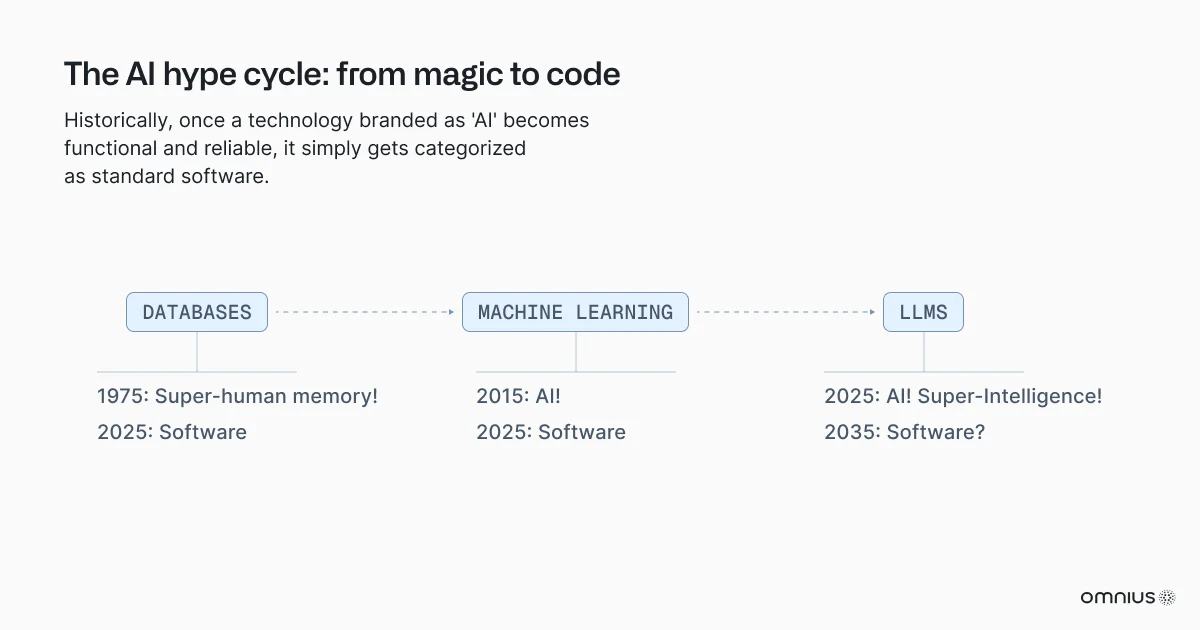

Lesson 1: AI is whatever machines can’t do yet

Larry Tesler’s 1970 observation remains accurate: “AI is whatever machines can’t do yet.” Once a capability works reliably, it stops being called “AI” and becomes “software.”

History shows this clearly.

In the 1970s, databases were seen as systems with superhuman memory and triggered worries about machine dominance. Today, they are basic, often legacy, infrastructure.

Fifteen years ago, photo recognition on a phone would have looked like science fiction.

Ten years ago, it was labeled AI.

Today, it is standard smartphone functionality.

Large language models currently sit in that transitional zone. We call them AI because they are still unstable, expensive, and not fully integrated. When these systems function reliably and predictably, the industry will reclassify them from AI to software.

Lesson 2: The scale of the shift is unknown

Two main frameworks try to describe how significant generative AI really is, and current evidence does not allow us to choose between them.

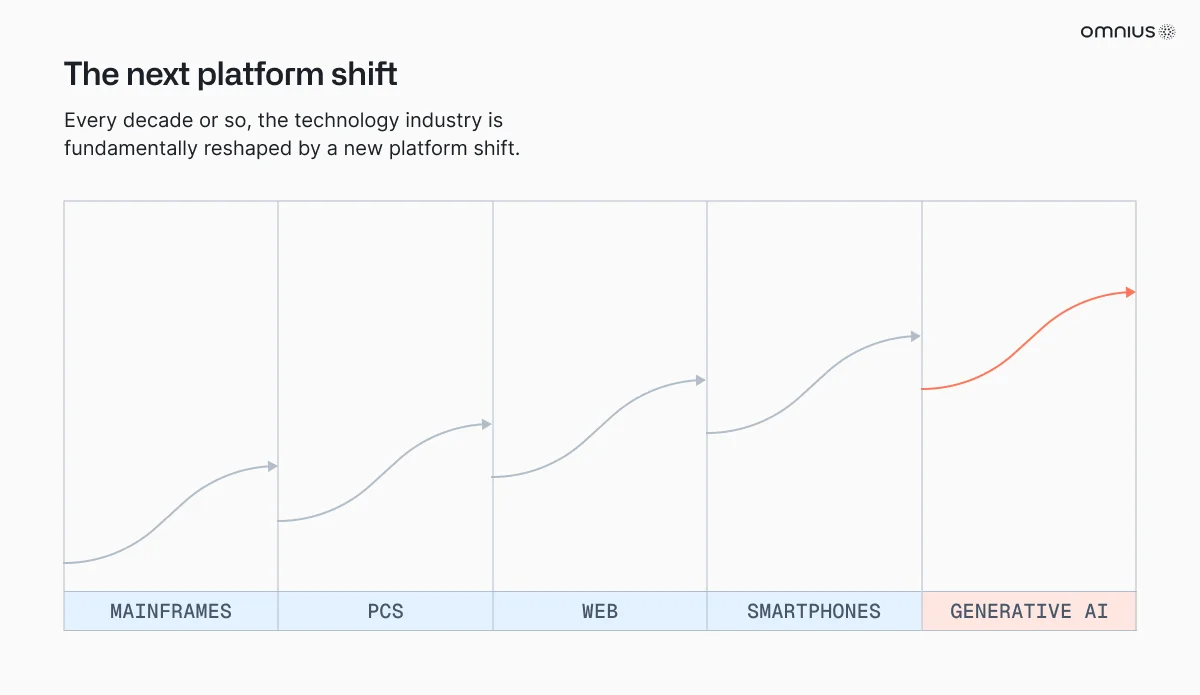

The platform shift framework

Technology has historically evolved in 10–15-year cycles:

Mainframes → PCs → the web → smartphones.

In this view, AI is the next foundation layer for software development and interfaces over the coming decade. It changes how we build and use software, but fits within the existing pattern.

The discontinuity framework

Figures like Bill Gates and Sergey Brin treat generative AI as a more fundamental break, comparable to electricity or computing itself. Gates has called it the most important development since the graphical user interface. In this frame, AI is not just another platform but a step-change in what can be automated or delegated.

The outcome range is wide:

- One end: “millions of specialized models” embedded into workflows, similar to how spreadsheets proliferated.

- Other end: a more unified system that can execute complex, multi-jurisdictional tasks on command (e.g., “Handle my taxes across five countries”), coordinating many steps automatically.

Current data does not distinguish clearly between these trajectories.

Marvin Minsky once predicted that machines with general human-level intelligence would arrive in 3–8 years. Decades later, such machines are still said to be “3–8 years away.” The uncertainty around scale is a constant.

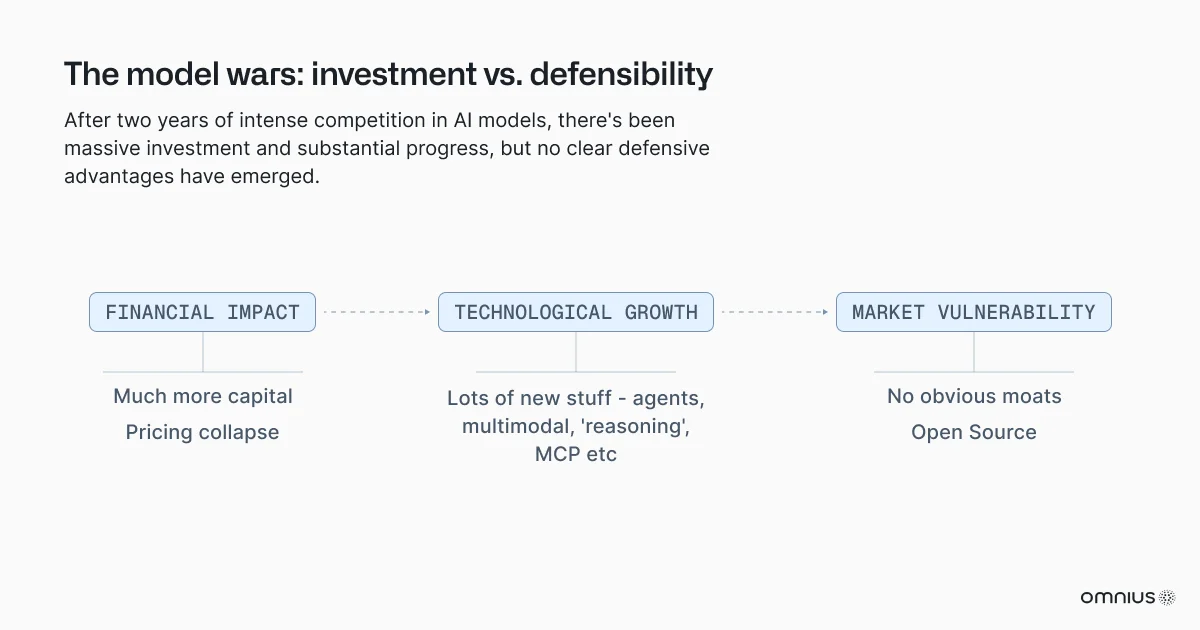

Lesson 3: Capital intensity doesn’t create defensible moats

The capital requirements of this cycle are unprecedented, but capital alone has not produced durable moats.

- The big four platform companies spent ~$220 billion on data center infrastructure in 2024.

- Projected 2025 spending exceeds $300 billion.

- Microsoft now allocates 30%+ of its revenue to capex, roughly double the rate of many capital-intensive telecom companies.

- Nvidia generates $45+ billion per quarter from AI chips, effectively operating as a high-margin, high-volume manufacturing and infrastructure supplier.

Despite this, no clear competitive moat has formed at the model layer. DeepSeek showed that ~$500 million is enough to build a frontier-grade model. That is a huge number for an individual, but it is well within reach for large technology firms, major funds, or state-backed actors.

OpenAI’s initial performance lead has narrowed. Multiple models are now “good enough” at the frontier. The result: massive capital intensity without equally massive barriers to entry.

Lesson 4: Models are commoditizing

Model development today looks a lot like the PC industry in the early 1990s. At that time, people debated processor speeds and modem specifications. Over time, those details became invisible; the hardware became a commodity.

The current focus on benchmark charts comparing Gemini, Claude, GPT, and Llama fits the same pattern. Beneath the benchmark race:

- Model costs are falling by one to two orders of magnitude per year.

- LLMs are simultaneously the most expensive and fastest-depreciating assets in recent tech history.

- Most frontier models now produce similar results for most practical use cases.

For many tasks, you can use the top model or one that is 95% as good at ~5% of the cost, sometimes running on consumer hardware.
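The following is a rough worked illustration of what these rates imply. It rests on two simplifying assumptions. First, the decline compounds at a constant rate of k orders of magnitude per year. Here c_0 is today's cost for a fixed level of capability and c(n) is its cost after n years. Second, "95% as good" can be treated as a single quality score. Under that assumption, the last line compares quality per unit of cost for the cheaper model against the top model, with both normalized to 1.

```latex
\begin{aligned}
c(n) &= c_0 \cdot 10^{-kn}, \qquad 1 \le k \le 2 \\
\frac{c(3)}{c_0} &= 10^{-3k} \in \left[10^{-6},\, 10^{-3}\right] \\
\frac{0.95 / 0.05}{1 / 1} &= 19
\end{aligned}
```

Under these assumptions, three years of decline cuts the cost of a given capability by a factor of 1,000 to 1,000,000. The cheaper model also delivers roughly 19 times as much quality per unit of cost. Real price curves are lumpier than a constant rate, so these numbers indicate scale rather than serve as a forecast.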

What has not commoditized is brand:

- ChatGPT dominates app store rankings and search trends by a wide margin.

- Perplexity generates a lot of industry discussion but barely shows up in consumer awareness data.

ChatGPT is becoming a verb, but it is not guaranteed to follow Google’s trajectory. It could instead follow MySpace’s: widely known early, then displaced if value shifts elsewhere in the stack. The main differentiation is already moving from model engineering to the application and integration layers.

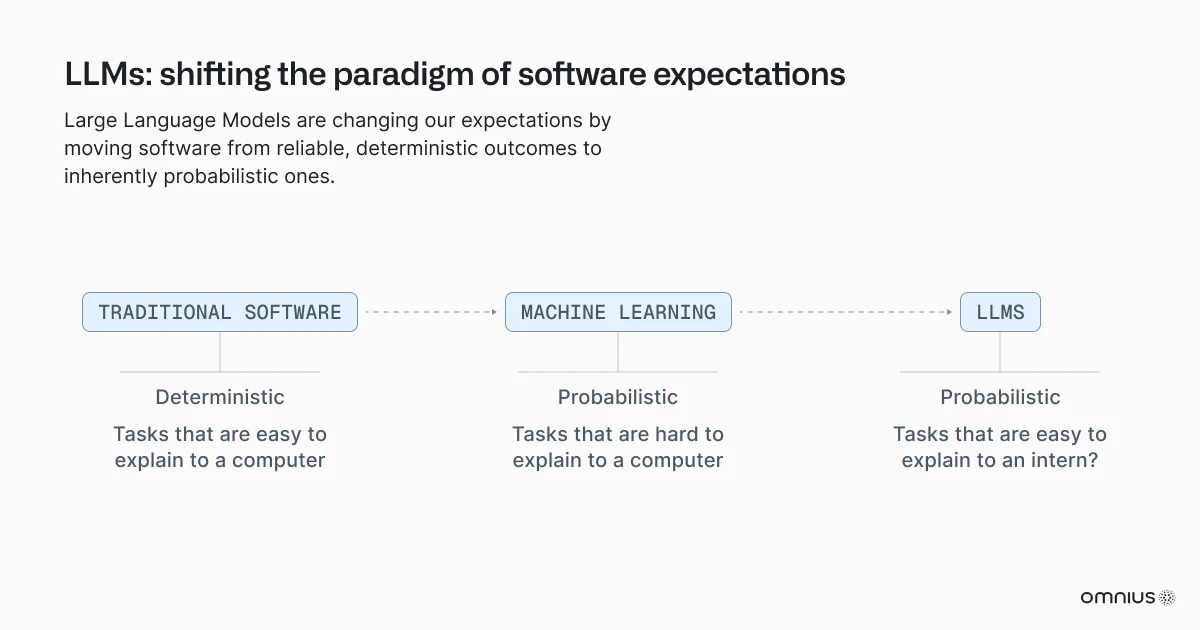

Lesson 5: Software architecture is shifting from deterministic to probabilistic

Traditional software is deterministic. You define the logic, the system executes it, and the same input always produces the same output. Mortgage calculations, bank transactions, database queries - these all rely on deterministic guarantees.

Machine learning introduced probabilistic methods for tasks that are hard to encode as rules: fraud patterns, language translation, and image recognition.

Large language models extend probabilistic behavior into domains that were previously handled through explicit instructions and strict business logic. They produce “probably correct” answers, not guaranteed ones.
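The contrast can be shown with a minimal Python sketch, assuming invented inputs. `monthly_payment` applies the standard fixed-rate amortization formula. `sample_answer` draws from a hypothetical probability distribution (`ANSWER_PROBS`, with made-up labels and weights) that stands in for how a model's output is sampled. This is an analogy, not a description of how any particular LLM is implemented.

```python
import random


def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Deterministic: the standard fixed-rate amortization formula.

    The same inputs always produce the same output.
    """
    r = annual_rate / 12  # monthly interest rate
    n = years * 12        # number of monthly payments
    if r == 0:
        return principal / n
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


# Hypothetical distribution over possible answers, standing in for a model's
# output probabilities. Labels and weights are invented for illustration.
ANSWER_PROBS = {
    "correct answer": 0.90,
    "plausible but wrong answer": 0.08,
    "off-topic answer": 0.02,
}


def sample_answer(rng: random.Random) -> str:
    """Probabilistic: draw one answer according to the weights above."""
    answers = list(ANSWER_PROBS)
    weights = list(ANSWER_PROBS.values())
    return rng.choices(answers, weights=weights, k=1)[0]


if __name__ == "__main__":
    # Deterministic: identical calls give identical results.
    a = monthly_payment(300_000, 0.06, 30)
    b = monthly_payment(300_000, 0.06, 30)
    print(round(a, 2), a == b)

    # Probabilistic: repeated draws disagree, and some are wrong.
    rng = random.Random(0)
    draws = [sample_answer(rng) for _ in range(1_000)]
    print(draws.count("correct answer") / len(draws))
```

Seeding the generator (`random.Random(0)`) makes a run reproducible, but it does not make the answers correct. Roughly one draw in ten still lands on a wrong answer, which is the sense in which probabilistic output is “probably correct.”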

A practical heuristic:

- If a task can be explained to a capable intern in 30 seconds, and you’d expect a decent result the next day, an LLM can likely handle it.

- If a task requires tacit knowledge, incremental learning, or weeks of observing how decisions are made, current LLMs will struggle, not because the domain is impossible but because the full context cannot be compressed into a short prompt.

This raises a key architectural question that is still unresolved:

- Do LLMs orchestrate and control traditional software systems?

- Or do they act as one API among many inside a more traditional architecture?

Current implementations explore both patterns.

No stable standard has emerged yet.
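Both patterns can be sketched in Python as a toy illustration, not a reference architecture. `fake_planner` and `fake_extract_account` are hypothetical stubs standing in for model calls, and no real model API is used. The account data and the `get_balance` and `flag_for_review` functions are also invented.

```python
from typing import Callable, Dict, List

# Deterministic "traditional software" (hypothetical toy data and functions).
BALANCES: Dict[str, float] = {"acct-1": 120.0, "acct-2": 45.5}


def get_balance(account_id: str) -> str:
    return f"{account_id} balance: {BALANCES[account_id]:.2f}"


def flag_for_review(account_id: str) -> str:
    return f"{account_id} flagged for review"


TOOLS: Dict[str, Callable[[str], str]] = {
    "get_balance": get_balance,
    "flag_for_review": flag_for_review,
}


def fake_planner(goal: str, history: List[str]) -> str:
    """Hypothetical stand-in for a model choosing the next action.

    A real system would send the goal and history to a model and parse its
    reply; this stub uses fixed rules so the sketch runs offline.
    """
    if not history:
        return "get_balance"
    if "review" in goal.lower() and len(history) == 1:
        return "flag_for_review"
    return "done"


def fake_extract_account(message: str) -> str:
    """Hypothetical stand-in for a model pulling an account ID from free text."""
    for token in message.replace(",", " ").replace("?", " ").split():
        if token.startswith("acct-"):
            return token
    return ""


# Pattern 1: the LLM orchestrates. The model decides which deterministic
# function runs next; the code only enforces a step limit.
def llm_orchestrates(goal: str, account_id: str, max_steps: int = 5) -> List[str]:
    history: List[str] = []
    for _ in range(max_steps):
        action = fake_planner(goal, history)
        if action not in TOOLS:  # "done" or an unrecognized action ends the loop
            break
        history.append(TOOLS[action](account_id))
    return history


# Pattern 2: the LLM is one API among many. Deterministic code owns the
# control flow and calls the model only for the step that needs judgment.
def code_orchestrates(message: str) -> str:
    account_id = fake_extract_account(message)  # the one probabilistic step
    if account_id not in BALANCES:              # explicit validation of its output
        return "Could not identify a valid account."
    return get_balance(account_id)              # deterministic from here on


if __name__ == "__main__":
    print(llm_orchestrates("Check acct-2 and send it for review", "acct-2"))
    print(code_orchestrates("What is the balance on acct-1?"))
```

The two patterns trade flexibility against control. In the first, the model can chain tools in ways the code never spelled out, so it needs guardrails such as the step limit. In the second, behavior stays easier to audit because only one step is probabilistic and its output is checked before anything else runs.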

Lesson 6: Mainstream adoption is limited despite easy access

Technical availability is high; mainstream usage is not.

Survey data from Q4 2024 suggests:

- 7–10% of people use generative AI chatbots daily.

- Another ~20% use them weekly or bi-weekly.

- Around 50% tried them once, saw no clear use case or got unexpected or low-quality answers, and stopped.

This is the “blank screen problem”: people don’t know what to ask or how to translate their work into prompts.

The VisiCalc analogy is instructive:

- Accountants in the late 1970s immediately saw that spreadsheets could compress a week’s work into minutes.

- Lawyers seeing the same demo concluded: “Interesting, but irrelevant to my work.”

The same split is visible today.

- High-adoption categories: software development (code, tests, documentation), marketing (copy, outlines, variants), and some operational workflows.

- Interested but cautious: customer support, where incorrect but confident answers create risk.

- Early adopters: people already comfortable with tools like Airtable and no-code platforms use ChatGPT, Claude, Perplexity, and Gemini regularly.

Most users still know that generative AI exists but do not know how it fits into their day-to-day work.

Code generation stands out as the clearest proven productivity gain. It meaningfully shortens the time needed to build and ship software and lowers the cost of starting a company. But it is one strong use case, not a universal transformation.

Lesson 7: Enterprise deployment is blocked by execution, not imagination

Enterprises do not lack ideas about how AI could be used. They struggle with implementation.

Commonly reported barriers include:

- Implementation and integration complexity

- Security and compliance requirements

- Limited in-house expertise

- Data governance concerns

- Insufficient data infrastructure

“Understanding use cases” consistently ranks low as a reason to delay adoption.

At the same time:

- Accenture reported $1.4 billion in generative AI bookings in Q4 2024. Some of this is almost certainly AI-washing (existing projects renamed to fit the trend), but not all of it, and the figure still indicates serious enterprise experimentation.

- Around 25% of large enterprises report having at least one production AI deployment.

- About 40% say they do not expect to deploy anything meaningful until 2026 or later.

Cloud computing offers a clear precedent. After more than 15 years, cloud infrastructure still runs only 20–30% of enterprise workloads. Adoption of new technology takes years, regardless of how capable it is.

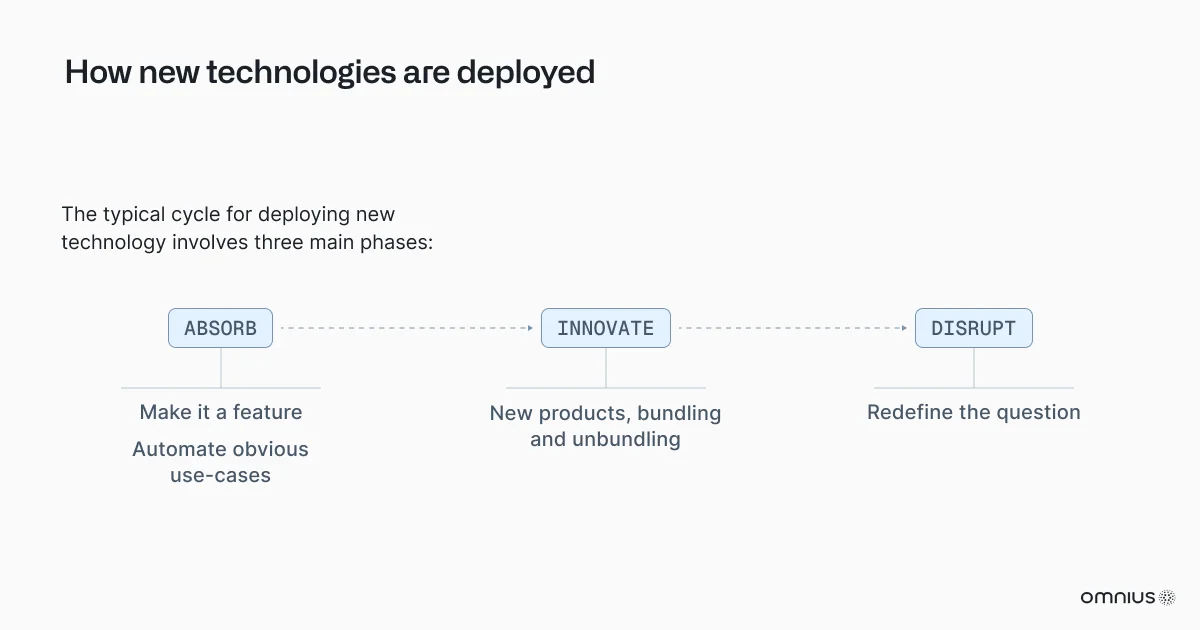

Lesson 8: Deployment follows predictable phases

Like previous platform shifts, AI is moving through distinct deployment phases:

Phase 1: Absorption and Automation

Incumbents bolt AI onto existing products and workflows. They automate obvious tasks with measurable ROI. This is bottom-line innovation: cost reduction and efficiency.

Phase 2: Innovation and Unbundling

New applications appear that were not possible before. Startups target specific, high-value problems. This is top-line innovation: new revenue, new products, new categories. Incumbents begin to be unbundled.

Phase 3: Disruption (Conditional)

The fundamental questions and interaction patterns change. Airbnb did not simply digitize hotel booking; it changed what “accommodation” means.

Today, AI is in late Phase 1 and early Phase 2.

We see:

- Vertical specialization: startups solving narrow but deep problems like COBOL→Java conversion, telecom billing reconfiguration, and invoice reconciliation.

- YC cohorts that are almost entirely AI-themed.

This is still software solving well-defined problems, not a wholesale restructuring of the economy.

Lesson 9: Real disruption happens when questions change, not just answers

Most discussion about AI “disrupting search” focuses on answers: users asking ChatGPT instead of Google, reducing search traffic and ad clicks.

The more important pattern is changing the question.

- Traditional search: user types a keyword query, receives a list of links.

- AI-mediated interaction: user provides context (text, image, history) and receives a direct suggestion or action.

Example: sending a photo of your fridge and asking, “What should I cook?”

That is not a keyword query. It doesn’t fit the current search ad model. There are no obvious “sponsored results” in that interaction.

This matters because:

- Global advertising spend is around $1 trillion per year.

- Roughly half of that goes to Google, Meta, and Amazon.

- A large portion of that spend depends on traditional search behavior.

If interaction patterns shift from keywords and link lists to context and direct answers, the entire value chain may need to be rebuilt.

The same dynamic appears outside search:

- In robotics, core questions are often about logistics and operations, not AI algorithms.

- In media and Hollywood, the impact depends on production workflows, contracts, and distribution, not just generative models.

- In finance and healthcare, regulatory and domain structures matter as much as model capabilities.

AI is an input; the real disruption depends on how industries are organized.

Lesson 10: Most current use cases are incremental, not transformative

The highest-adoption use cases today are straightforward:

- Software development: code, tests, refactoring, documentation

- Marketing: copy drafting, variations, research summaries

- Customer support: draft responses, summarization, routing

- Operations and finance: invoice reconciliation, data extraction, basic reporting

These are useful and often high-ROI, but they are not new industries. They are incremental improvements in known workflows.

Disruption will not look uniform across sectors:

- Uber was destructive disruption for taxis.

- Airbnb was mostly additive disruption, expanding the accommodation market.

AI will likely produce a mix of effects - destructive in some areas, additive in others, marginal in many. There will not be a single pattern for “AI disruption.”

Lesson 11: All AI strategy questions collapse into two types

After several years of AI being discussed at almost every conference and board meeting, most questions fall into two categories.

Category 1 – Standard platform shift questions

Examples:

- Should we build on Microsoft, Google, or an independent provider?

- Should we hire Accenture, Bain, or build internally?

- Which business units should we start with?

These questions resemble previous transitions (web, mobile, cloud). Standard evaluation frameworks apply: vendor risk, cost, talent, time-to-market.

Category 2 – Genuine unknowns

Examples:

- Where will value capture concentrate?

- Which business models will be defensible?

- What will the dominant market structure look like?

In 1995, nobody knew that:

- The internet would become the dominant platform.

- The web would be the main interface.

- Value would concentrate in search advertising and social networks.

We are in a similar position today. Everyone agrees that “AI” will matter; nobody can say with confidence where the durable profit pools will be.

Lesson 12: The industry funds the past while talking about the future

Industry narrative and industry revenue are decoupled.

Narrative focuses on:

- AI

- Robotics

- Space

- Crypto

- Nuclear

Revenue still comes mostly from:

- E-commerce

- SaaS and enterprise software

- Streaming and digital media

- Mobile and workflow tools

After decades of rollout:

- E-commerce has reached 20–30% of global retail.

- Cloud runs 20–30% of enterprise workloads.

- Autonomous vehicles are only now approaching practical deployment in limited contexts.

Older technologies continue to expand and generate most of the money while the industry discusses newer ones.

AI will follow the same pattern: research first, then long deployment cycles. By the time LLMs are deeply embedded and boring, attention will likely have shifted to another frontier topic.

Observable Data Points (2024–2025)

- Big four platforms: $300+ billion projected 2025 infrastructure spend

- Nvidia: $45+ billion quarterly revenue from AI chips

- Microsoft: 30%+ of revenue allocated to capex (vs. ~15% for typical telcos)

- Model building: ~$500 million establishes frontier-level capability

- Daily chatbot usage: 7–10% of population

- Weekly+ chatbot usage: ~30% of population

- Enterprise cloud adoption: 20–30% of workloads after 15+ years

- Enterprise AI production deployments: ~25% of large companies

- Accenture Q4 2024 generative AI bookings: $1.4 billion

- Global advertising: ~$1 trillion annually, ~50% to Google, Meta, Amazon

- E-commerce penetration: 20–30% of global retail

- Recent YC cohorts: near-100% AI-branded startups

Conclusion

Three years after ChatGPT, AI looks less like a singular revolution and more like a powerful new layer in a familiar pattern: rapid capability growth, heavy investment, slow and uneven deployment, and unclear long-term market structure.

Key observations:

- Models are commoditizing faster than most expected.

- Capital intensity is huge, but moats at the model layer are thin.

- Real value is emerging in specific, often mundane, workflows.

- Consumer and enterprise adoption patterns follow historical precedent.

- The questions that matter most - where value will concentrate and how markets will be structured - remain unresolved.

The current position is early in a long deployment cycle, with clear near-term wins in software development, marketing, and process automation, and high uncertainty about where the deepest long-term value will accrue.
