The way people find information online is going through its biggest shift in decades. For more than 20 years, the goal of SEO was simple: rank on Google’s blue links and win clicks.

But in 2025, that model is breaking down fast. Users are no longer relying only on traditional search engines. Instead, they’re getting answers directly from AI-driven platforms like ChatGPT, Perplexity, Claude, Bing Copilot, and Google’s AI Overviews.

These platforms don’t just display links. They generate conversational summaries, often citing sources, but sometimes giving answers without attribution. That raises a critical question for every company:

How do you make sure AI systems can find, understand, and cite your content?

Traditional SEO still matters: fast websites, clean architecture, and structured data are non-negotiable. But AI systems don’t work like Google. Large language models (LLMs) consume content differently: they ingest text, break it into tokens, and then reassemble those tokens into synthesized answers. The crawlers powering these models are also less advanced than Googlebot, which means a site that looks fine in Google Search might be invisible to an AI engine.

This is where Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO) come in. GEO is about making sure your content is discoverable and consumable for AI models, while AEO is about structuring content so these systems can extract concise, fact-based answers. Together, they form the technical foundation of visibility in the AI era.

For SaaS and fintech companies, industries where authority and trust are essential, this isn’t optional. If your research, documentation, or thought leadership isn’t machine-readable, AI engines may skip over it. Worse, they may cite your competitors instead.

That’s why this article walks through how to technically optimize your website for GEO and AEO in 2025, from crawlability and structured data to modular content design and zero-click branding. The goal isn’t just to stay visible; it’s to become the answer that AI engines deliver.

Why is technical optimization critical for AI search?

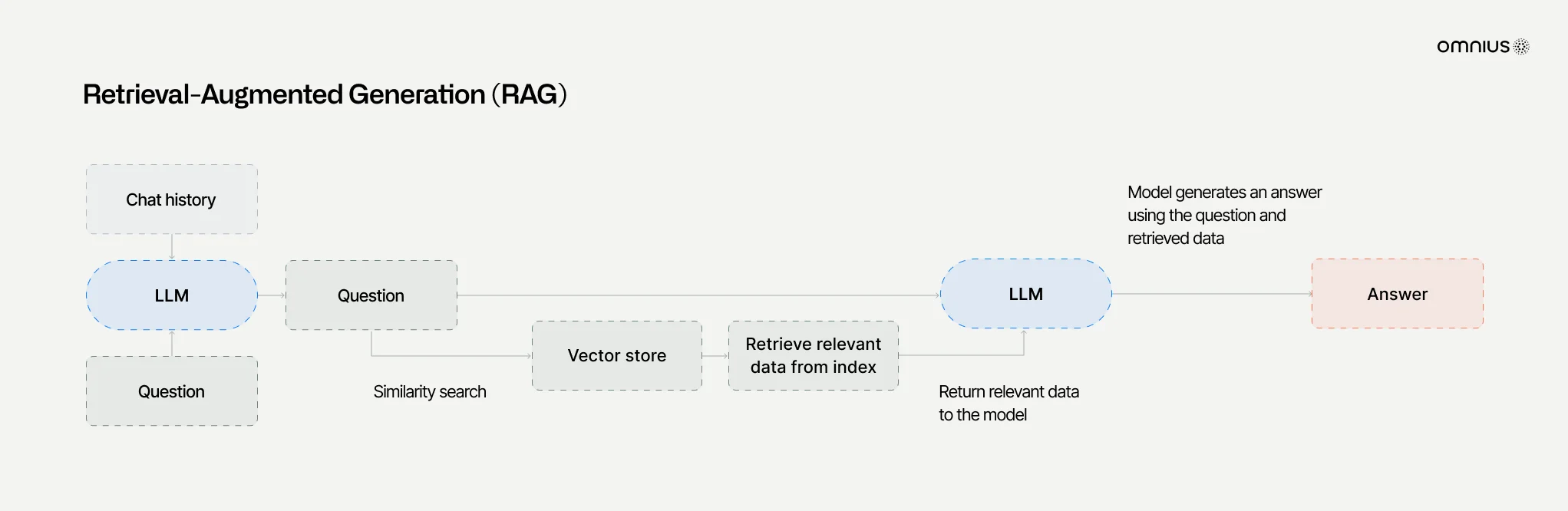

Before jumping into tactics, it’s important to understand how AI search engines like ChatGPT, Perplexity, Bing Copilot, and Claude actually process your content. Unlike Google’s PageRank-era algorithm, which ranked whole pages, today’s AI platforms work with a hybrid model that blends retrieval and generation.

Most of them follow a Retrieval-Augmented Generation (RAG) pipeline:

1. Fetch – The AI system queries its index or the live web, often using a simplified crawler (e.g., GPTBot, PerplexityBot, or Bing’s AI crawler).

2. Parse – The crawler breaks the page into smaller chunks. Clean HTML, modular sections, and minimal JavaScript make this step easier.

3. Generate – The model synthesizes a natural-language answer, sometimes citing sources (Perplexity nearly always does; ChatGPT may or may not, depending on mode).
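To make the parse and selection steps concrete, here is a minimal Python sketch. It assumes a toy scoring rule (the number of words a chunk shares with the query); production engines use embeddings and their own ranking, so the function names and the scoring below are illustrative only.

```python
import re

def split_into_chunks(page_text: str) -> list[str]:
    """Split a page into chunks on blank lines (one chunk per paragraph)."""
    return [c.strip() for c in re.split(r"\n\s*\n", page_text) if c.strip()]

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def top_chunks(page_text: str, query: str, k: int = 1) -> list[str]:
    """Return the k chunks that share the most words with the query (toy ranking)."""
    query_terms = tokenize(query)
    chunks = split_into_chunks(page_text)
    ranked = sorted(chunks, key=lambda c: len(query_terms & tokenize(c)), reverse=True)
    return ranked[:k]

# Hypothetical two-paragraph page: only the first paragraph answers the query.
page = """Revenue-based financing lets a company repay investors from a share of monthly revenue.

Our office is located in the city center and is open on weekdays."""

print(top_chunks(page, "How does revenue-based financing work?"))
```

Only the passage that matches the question is passed on to generation, which is why self-contained, clearly scoped sections matter more than the page as a whole.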

Technical optimization matters more now because of one simple fact about the first step of this pipeline: AI crawlers are far less capable than traditional search crawlers.

Googlebot has decades of engineering behind it. It can handle JavaScript rendering, recover from server errors, and crawl deep site structures.

AI crawlers like GPTBot or PerplexityBot are lightweight. They often fail on JavaScript-heavy pages, gated resources, or complex navigation. If they hit technical barriers, they simply skip your content.

In the past, a site could get away with weaker technical SEO because Google was able to “figure it out.” That’s no longer true. With AI engines, technical optimization is no longer optional; it’s the difference between being included in answers and being invisible.

This shift makes technical SEO, now extended to AI discoverability, more important than ever. Clean architecture, error-free delivery, and machine-friendly markup directly determine whether your content even enters the AI answer pool.

How to optimize websites technically for LLM search

Now that we’ve covered how LLM-based search engines fetch, parse, and generate answers, the next step is understanding how to make your site technically ready for them. Unlike Google, these crawlers are less advanced, which makes technical optimization a non-negotiable foundation of GEO and AEO.

In this article, we’ll walk through the key technical strategies that ensure your content can actually be discovered, parsed, and cited by AI systems. Specifically, we’ll cover:

1. Crawlability & indexation – making sure AI crawlers can access and read your site without friction

2. Content architecture & design – structuring content in modular, machine-friendly ways

3. Structured data & semantic HTML – adding schema and clean markup so machines understand your content

4. Authoritativeness & trust signals – embedding visible and structured credibility markers and using an AI text checker to ensure your content’s originality and authenticity

5. Performance & accessibility – optimizing speed, stability, and rendering for lightweight crawlers

Let’s start with the most fundamental layer.

1. Crawlability & Indexation for AI Bots

AI engines cannot use your content if they cannot crawl it. This sounds obvious, but it’s where many websites fail. Unlike Googlebot, which has been fine-tuned over decades, AI crawlers are still early-stage. They’re less tolerant of errors, they struggle with JavaScript-heavy pages, and they often give up where Googlebot would persist.

That’s why crawlability and indexation are the single most important foundations of GEO and AEO. If AI crawlers can’t see your content, they can’t parse it, and they won’t cite it.

2. Allow AI-specific user agents

Just as SEO has always required allowing Googlebot, GEO requires enabling the new generation of AI crawlers. The most common ones include:

- OpenAI: GPTBot, OAI-SearchBot, ChatGPT-User

- Anthropic: ClaudeBot

- Perplexity: PerplexityBot

- Google: GoogleOther, Google-Extended (a robots.txt token that controls whether content is used for Google’s AI models)

- Microsoft: Bingbot (Bing’s crawler, whose index also feeds Copilot)

The minimum step is to explicitly allow these bots in your robots.txt.

Example configuration:

User-agent: GPTBot
Allow: /

User-agent: PerplexityBot
Allow: /

User-agent: ClaudeBot
Allow: /

User-agent: Google-Extended
Allow: /

Failing to do this can mean invisibility in AI search, even if you rank well on Google.
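A quick way to confirm that a robots.txt file does what you intend is to test it with Python’s standard-library parser. The sketch below is a rough check, not a guarantee of how each vendor’s crawler interprets the file; the sample rules and the example.com URL are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens listed in this article; extend as vendors publish new ones.
AI_USER_AGENTS = [
    "GPTBot", "OAI-SearchBot", "ChatGPT-User",
    "ClaudeBot", "PerplexityBot", "Google-Extended",
]

def blocked_agents(robots_txt: str, url: str) -> list[str]:
    """Return the AI user agents that the given robots.txt forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in AI_USER_AGENTS if not parser.can_fetch(agent, url)]

# Hypothetical robots.txt that accidentally blocks one AI crawler.
sample = """\
User-agent: GPTBot
Allow: /

User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""

print(blocked_agents(sample, "https://example.com/blog/post"))
```

Running a check like this against the live file after every deployment catches accidental Disallow rules before they cost visibility.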

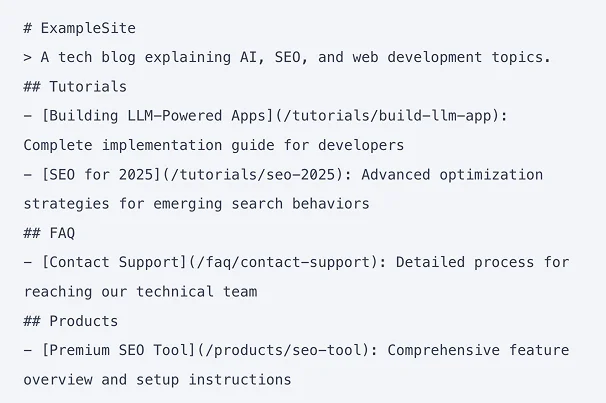

3. Experiment with llms.txt

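A proposed convention, llms.txt, is often described as the robots.txt of AI, but it works differently. In the public proposal, it is a Markdown file served at /llms.txt that gives language models a short summary of the site and a curated list of links to its most important pages. It does not grant or deny permissions, so training and crawling rules still belong in robots.txt. Adoption isn’t universal yet, and honoring the file is voluntary, but it’s worth implementing now to stay ahead.

Best practices include:

- Opening with the site or brand name and a one-paragraph summary

- Grouping links to key documentation, research, and product pages under clear headings

- Adding a short description to each link so a model knows what it will find there

While it won’t influence crawlers that ignore it, it gives compliant systems a clean entry point to your best content.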
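As a rough illustration, the Python sketch below writes a file in the layout described by the public llms.txt proposal: a title line, a one-line summary in a blockquote, then sections of annotated links. The site name, URLs, and section names are hypothetical, and the layout is an assumption based on that proposal rather than a rule any engine is known to enforce.

```python
def build_llms_txt(site_name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt body: title, blockquote summary, then link sections."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"

# Hypothetical site structure used only for this example.
content = build_llms_txt(
    "Example Co",
    "Example Co builds invoicing software for small fintech teams.",
    {
        "Docs": [("API reference", "https://example.com/docs/api", "Endpoints and auth")],
        "Research": [("2025 report", "https://example.com/report", "Survey findings")],
    },
)
print(content)
```

The output would be saved as llms.txt at the site root, next to robots.txt.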

4. CDN and firewall whitelisting

Many AI crawlers are accidentally blocked at the CDN/WAF layer (Cloudflare, Akamai, Fastly). SaaS and fintech sites often enforce strict bot protections, which is great for security but can lock out legitimate AI engines.

To avoid this, whitelist known AI crawler IP ranges and monitor firewall logs. This ensures real crawlers aren’t lumped in with scrapers.
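One way to separate real crawlers from impostors is to compare the request IP against the ranges each vendor publishes. The Python sketch below shows the idea; the bot name and the networks are placeholders from the reserved documentation ranges, so they must be replaced with the vendor’s actual published list before use.

```python
import ipaddress

# Placeholder data: documentation-only networks, NOT real crawler ranges.
ALLOWED_CRAWLER_NETWORKS = {
    "ExampleAIBot": ["192.0.2.0/24", "2001:db8::/32"],
}

def is_verified_crawler(bot_name: str, remote_ip: str) -> bool:
    """Return True if remote_ip falls inside a network listed for bot_name."""
    ip = ipaddress.ip_address(remote_ip)
    networks = ALLOWED_CRAWLER_NETWORKS.get(bot_name, [])
    return any(ip in ipaddress.ip_network(net) for net in networks)

print(is_verified_crawler("ExampleAIBot", "192.0.2.10"))    # inside the listed range
print(is_verified_crawler("ExampleAIBot", "198.51.100.7"))  # outside the listed range
```

A request that claims an AI user agent but fails this check can stay subject to normal bot protection, while verified requests are let through.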

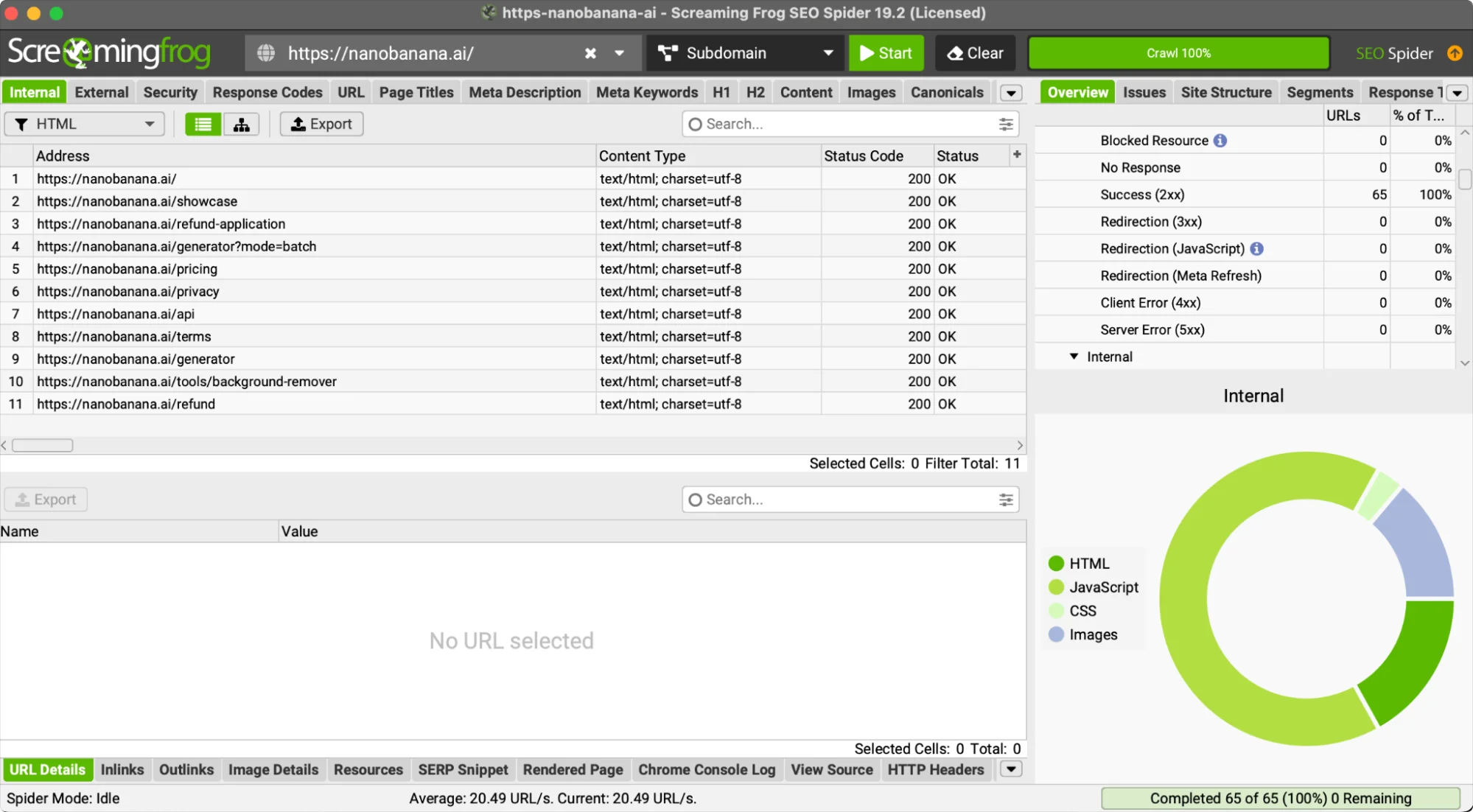

5. Status codes and error hygiene

AI crawlers are less forgiving than Googlebot when it comes to server errors. If they hit too many broken links, 5xx errors, or redirect loops, they’ll drop your site.

Best practices:

- Ensure consistent 200 OK responses for all canonical URLs

- Fix or redirect 4xx errors

- Audit with server log analysis to confirm AI bots are seeing healthy status codes

For this purpose, you can use a crawler such as Screaming Frog to check that your website is error-free.
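Redirect loops and long redirect chains are easy to miss by eye. The Python sketch below assumes you have already exported a redirect map (source URL to target URL) from a crawl; the paths and the five-hop limit are hypothetical choices for illustration.

```python
def trace_redirects(start: str, redirects: dict[str, str], max_hops: int = 5):
    """Follow redirects from start; return (path, problem) where problem is
    None, "loop", or "chain too long"."""
    path = [start]
    while path[-1] in redirects:
        nxt = redirects[path[-1]]
        if nxt in path:
            return path + [nxt], "loop"
        path.append(nxt)
        if len(path) - 1 > max_hops:
            return path, "chain too long"
    return path, None

# Hypothetical redirect map: one clean redirect and one loop.
redirect_map = {"/old-pricing": "/pricing", "/a": "/b", "/b": "/a"}

print(trace_redirects("/old-pricing", redirect_map))
print(trace_redirects("/a", redirect_map))
```

Any URL that returns a problem here is a candidate for a direct, single-hop redirect to its final destination.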

6. Sitemaps and real-time signals

Finally, make discovery as easy as possible:

- Provide comprehensive XML sitemaps (news, videos, products)

- Use IndexNow for real-time URL updates (increasingly adopted by Bing and AI-first engines)

- Include lastmod tags to highlight content recency

Together, these steps reduce the chance your new or updated content gets missed by generative engines. By handling them proactively, companies create the baseline of accessibility that GEO/AEO requires.
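For sites without a CMS plugin that handles this, a sitemap with lastmod values can be generated with a few lines of Python. This is a minimal sketch using the standard sitemap namespace; the URLs and dates are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build an XML sitemap from (url, lastmod) pairs; lastmod uses YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical pages and modification dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2025-01-15"),
    ("https://example.com/blog/geo-guide", "2025-02-03"),
])
print(sitemap_xml)
```

The lastmod value should change only when the page content actually changes, otherwise it stops being a useful recency signal.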

Although we have heard repeatedly in recent years that technical SEO isn’t worth the time, this type of optimization has become a critical component of both GEO and SEO. A slow, error-prone, or poorly configured site won’t just hurt SEO rankings anymore - it will make you invisible in AI answers.

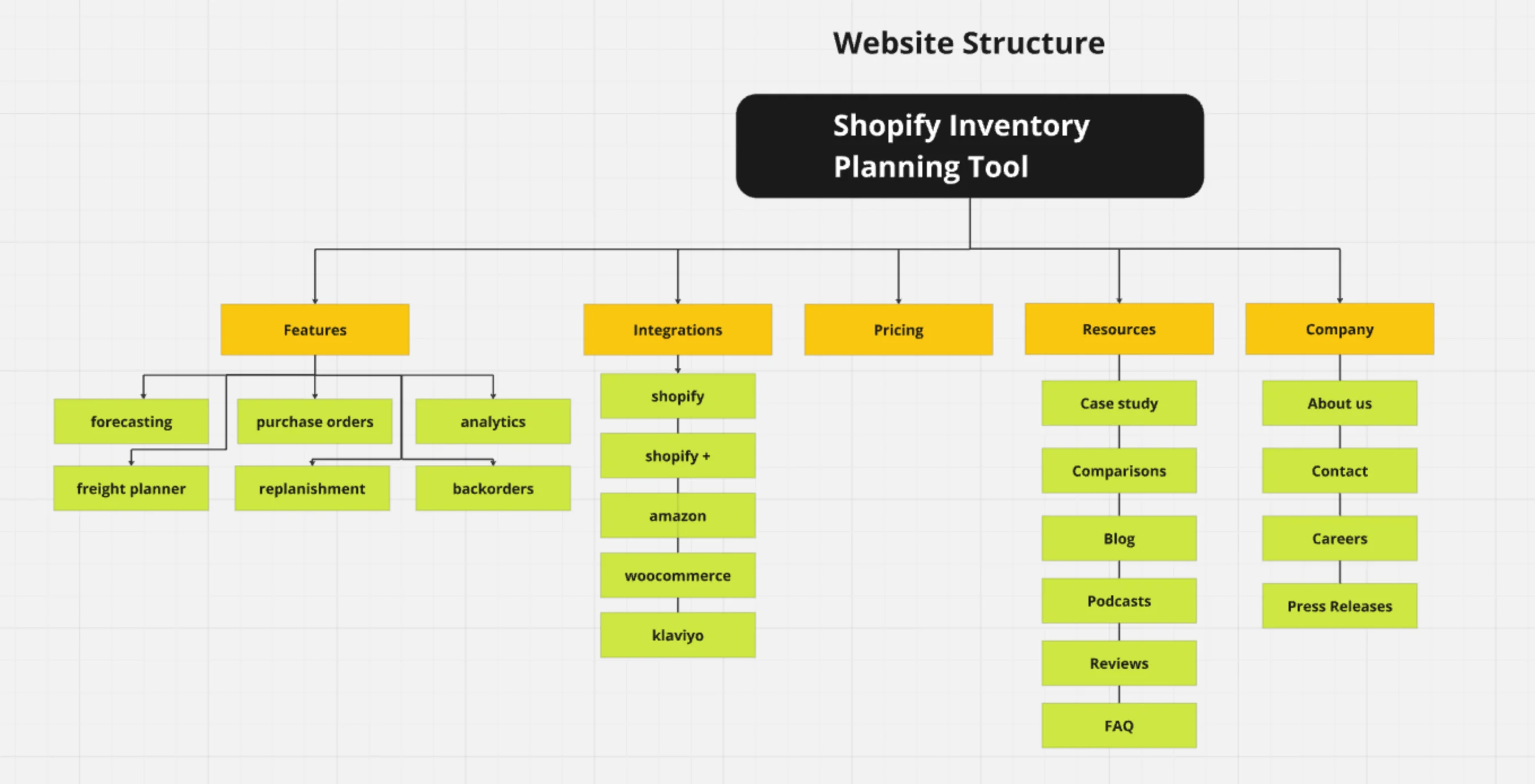

Content Architecture & Information Design

Even if AI crawlers can reach your site, poor content architecture can still hold you back. Remember: LLMs don’t read an entire page like a human. They break pages into chunks, then select the most relevant passages to generate an answer. If your content isn’t structured in a way that makes these chunks clear, you reduce your chances of being cited.

This is why your site’s information design has to work not just for users, but also for machines. The goal is to make content modular, scannable, and extractable.

1. Flat information architecture

AI crawlers are less persistent than Googlebot when navigating deep site structures. If critical content is buried too many layers down, it may never be indexed.

Good practice:

- Keep important pages within 2–3 clicks of the homepage

- Avoid deep nesting or “orphan” pages

- Use breadcrumbs and clear navigation to expose hierarchy
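Click depth and orphan pages can both be measured from an internal link graph. The Python sketch below runs a breadth-first search from the homepage; the link graph is a hypothetical example, and in practice it would come from a site crawl export.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal link graph: page -> pages it links to.
site = {
    "/": ["/blog", "/pricing"],
    "/blog": ["/blog/geo-guide"],
    "/blog/geo-guide": ["/blog/geo-guide/appendix"],
    "/blog/geo-guide/appendix": ["/blog/geo-guide/appendix/data"],
    "/orphan": [],
}

depths = click_depths(site)
too_deep = sorted(p for p, d in depths.items() if d > 3)
orphans = sorted(set(site) - set(depths))
print("Deeper than 3 clicks:", too_deep)
print("Unreachable from home:", orphans)
```

Pages in either list are the ones to surface through navigation, hub pages, or additional internal links.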

2. Internal linking & topical clusters

AI engines look for context across your site, not just isolated passages. Strong internal linking signals authority and helps crawlers understand topical relationships.

- Build topic clusters (pillar pages supported by subpages)

- Use descriptive anchor text that mirrors natural queries

- Interlink related resources to reinforce context

This isn’t just for humans - it makes it easier for AI to see you as an authority on a subject.

3. Modular content & chunking

Generative engines love modular content they can lift directly into an answer. Long, unbroken text blocks are often skipped.

Aim for:

- Sections of 300–400 words (roughly 400–550 tokens, at about 1.3 tokens per English word)

- Short paragraphs, lists, and callout boxes for clarity

- Each section focused on one main idea or question

This mirrors how Wikipedia is structured - one of the most commonly cited sources in AI-generated answers.
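Section length is easy to audit automatically. The Python sketch below assumes the draft is stored as Markdown with "## " section headings, which is an assumption about your authoring format, and it uses the 400-word ceiling suggested above as its default.

```python
def section_word_counts(markdown_text: str) -> dict[str, int]:
    """Map each '## ' heading to the number of words in the text beneath it."""
    counts: dict[str, int] = {}
    current = None
    for line in markdown_text.splitlines():
        if line.startswith("## "):
            current = line[3:].strip()
            counts[current] = 0
        elif current is not None:
            counts[current] += len(line.split())
    return counts

def oversized_sections(markdown_text: str, max_words: int = 400) -> list[str]:
    """Return headings whose sections exceed max_words."""
    return [h for h, n in section_word_counts(markdown_text).items() if n > max_words]

# Hypothetical draft: one short section and one that runs long.
draft = "## What is GEO?\n" + "word " * 50 + "\n## How does chunking work?\n" + "word " * 450

print(section_word_counts(draft))
print(oversized_sections(draft))
```

Sections flagged here are candidates for splitting into two question-led subsections.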

4. Heading strategy & natural-language questions

Headings aren’t just for design; they’re signals for machines. AI systems use them to understand what a section is about.

- One H1 per page

- Use H2s and H3s as natural questions, not vague phrases (e.g., instead of “Financing Models,” use “How does revenue-based financing work?”)

- Add tables of contents or jump links on long-form pages to improve scannability

5. Descriptive URLs & clean markup

Technical hygiene matters here too. Short, descriptive URLs and clean semantic HTML help AI systems parse content more effectively.

Good practice:

- Keep URLs short and keyword-aligned

- Avoid messy parameters or hash fragments

- Use semantic HTML (<article>, <section>, <aside>) instead of generic <div> wrappers

The takeaway is simple: design content the way AI reads it, in an NLP-friendly way. Clean hierarchy, modular sections, and natural question-based headings don’t just help users - they make it far more likely your passages will be chosen for generative answers.

Structured Data, Schema & Semantic HTML

AI engines don’t just “read” your text. They rely on underlying signals, structured data, and semantic markup to understand who wrote it, what it’s about, and whether it can be trusted. Schema and semantic HTML act as a translation layer between your content and machines.

Done right, they increase the likelihood that your brand is cited accurately in AI-generated answers.

Why schema matters for AI search

Traditional SEO has long used schema to boost rich snippets. In the AI era, it goes further. Schema gives models explicit machine-readable context:

- Who authored the page

- When it was published or last updated

- What entities (company, product, person, place) are involved

- Why the content is credible

This helps prevent misattribution and ensures that when your content is quoted, it carries your brand with it.

Core schema types to implement

Not every schema type is relevant for GEO/AEO, but a few are essential:

1. Article – with author, datePublished, and dateModified

2. FAQPage – for Q&A content that maps to natural queries

3. HowTo – for step-based guides

4. Product – with specs, price, and availability

5. Organization – for company details (logo, social handles, founding date)

6. Person – for author bios and expertise
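As an example of the first type, the Python sketch below builds Article markup as JSON-LD, ready to place in a page’s head. The property names come from schema.org; the headline, names, and dates are hypothetical.

```python
import json

def article_jsonld(headline: str, author_name: str, publisher_name: str,
                   date_published: str, date_modified: str) -> str:
    """Return a <script> tag containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name},
        "datePublished": date_published,
        "dateModified": date_modified,
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")

# Hypothetical article metadata.
snippet = article_jsonld(
    "How does revenue-based financing work?",
    "Jane Doe", "Example Co", "2025-01-15", "2025-03-02",
)
print(snippet)
```

Generating the markup from the same data that renders the visible byline and dates keeps the two from drifting apart.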

Semantic HTML for machine parsing

Schema is powerful, but it works best when combined with semantic HTML. AI crawlers are less advanced than Googlebot and rely heavily on clear markup to understand content structure.

Best practices:

- Use <article>, <header>, <section>, <footer>, <aside> to define hierarchy

- Avoid burying text in generic <div>s or scripts

- Label navigation and buttons with ARIA roles for accessibility and machine interpretation

- Provide alt text for images, which some AI systems use as context

Visible trust signals

Even with schema, visible credibility markers strengthen both user and crawler trust. Include:

- “Last updated: [date]” in article headers

- Author bios with credentials and LinkedIn links

- A clear About/Organization page with legal details, press mentions, or compliance badges

Authoritativeness & trust signals

In the AI search era, authority isn’t just a ranking factor; it’s a requirement for visibility. Traditional SEO rewarded expertise and backlinks, but LLM-powered engines take it further. Some appear to favor a narrow set of trusted domains. If your brand isn’t seen as credible, your content may be skipped entirely or attributed incorrectly.

That’s why you need to actively supply trust signals across your site and digital footprint. Don’t assume AI engines will infer credibility on their own.

1. Establish author and brand authority

Every piece of content should clearly show who’s behind it. Anonymous or “admin” articles are easy to overlook.

Best practices:

- Create author profile pages with bios, headshots, credentials, and professional links

- Consistently attribute content to real people, not generic accounts

- Use Organization schema to define company details (name, logo, founding date, verified social profiles)

This builds entity recognition, so when AI engines see your brand or author name, they know exactly who it refers to.

2. Use named entities and evidence

AI models favor content that makes explicit references. Instead of vague phrasing, call out company names, products, and people directly.

- Mention your brand, product, and key people in the body text, not just metadata

- Reference data, studies, or third-party reports to back up claims

- Use citations and outbound links to reputable sources

Passages with evidence are easier for AI to cite confidently, increasing your odds of being surfaced in answers.

3. Strengthen external validation

Authority isn’t built only on your site. AI crawlers cross-check content against the wider web. That means your digital footprint matters.

Ways to build credibility:

- Earn high-quality backlinks from press coverage, industry reports, or research institutions

- Participate in industry roundtables, webinars, and conferences

- Secure mentions in reputable communities and publications

These signals reinforce that your brand isn’t just self-promoting; it’s recognized by others.

4. Show structured reputation markers

Finally, add visible credibility markers that are easy for both humans and crawlers to verify:

- Compliance badges (e.g., GDPR, ISO, SOC 2)

- Awards or certifications displayed on-site (and encoded in schema)

- Customer reviews and ratings where appropriate

Optimizing for the zero-click era

One of the biggest shifts in 2025 is the rise of AI Overviews (Google) and direct answer delivery (ChatGPT, Perplexity, Bing Copilot). Instead of sending users to websites, these systems increasingly surface your content inside the answer itself.

This introduces a new zero-click reality. Your content may be quoted, paraphrased, or cited directly in an AI’s response without the user ever landing on your site. For example:

- A SaaS knowledge base article might resolve a query inside ChatGPT.

- A fintech trend report could be cited in Perplexity as the authoritative source.

In this model, your visibility depends on whether the AI:

1. Finds your content (crawlability)

2. Understands it (structured formatting)

3. Trusts it enough to cite it (authority + schema)

That’s why your brand messaging must be embedded directly into the content. When an AI lifts a passage, your authority should travel with it.

Recent data shows why this matters:

- Around 18% of global Google searches now include AI Overviews, and in the U.S. the share is closer to 30%

- When AI Overviews appear, organic click-through rates drop sharply, in some cases falling from 7.3% to 2.6% for top-ranked results

For brands, this is both a risk and an opportunity. On one hand, you may lose traffic. On the other, your authority and brand can reach far more users if you’re cited correctly.

1. Managing Google AI Overviews visibility

Google offers a few controls for how your content appears in AI Overviews. These include:

- nosnippet – blocks text from being displayed

- data-nosnippet – prevents specific sections (e.g., pricing tables) from being pulled

- max-snippet:[length] – sets maximum character length of excerpts

- noindex – excludes a page entirely

- Google-Extended – determines whether your content is used to train Google’s AI models

These let you control what AI can extract while still keeping key passages available for citation.

2. Deciding on opt-in vs. opt-out

Strategically, most companies should opt in for citation (for visibility), while selectively gating sensitive information like pricing or compliance details. Outright blocking may protect content, but it also makes your brand invisible in AI-driven search.

3. The zero-click branding imperative

If users never land on your site, your brand needs to travel inside the snippet itself. That means:

- Embedding your brand and product names naturally into fact-rich passages (e.g., “According to Omnius’ 2025 SaaS Growth Report…”)

- Using schema to encode reviews, ratings, and awards

- Displaying visible trust markers (certifications, compliance badges, partnerships)

This way, even if you don’t get the click, you win the impression.

4. Structuring for extractability

AI engines choose passages that are clear, factual, and easy to lift. To increase your chances:

- Use short, fact-dense answers (2–4 sentences)

- Provide FAQ sections at the end of articles

- Include tables, bullet lists, and definitions for scannability

- Write in a confident, declarative tone; AI prefers text that doesn’t hedge

Performance & Accessibility

AI crawlers are even less forgiving than Googlebot when it comes to performance. They’re lightweight, impatient, and far more likely to abandon a crawl if a page is slow, bloated, or hidden behind JavaScript. In other words, a site that “works fine for users” may still be invisible to AI engines.

That’s why performance and accessibility are not just UX issues; they’re core technical requirements for GEO and AEO. If an AI crawler can’t render your content quickly and cleanly, you won’t make it into the answer pool.

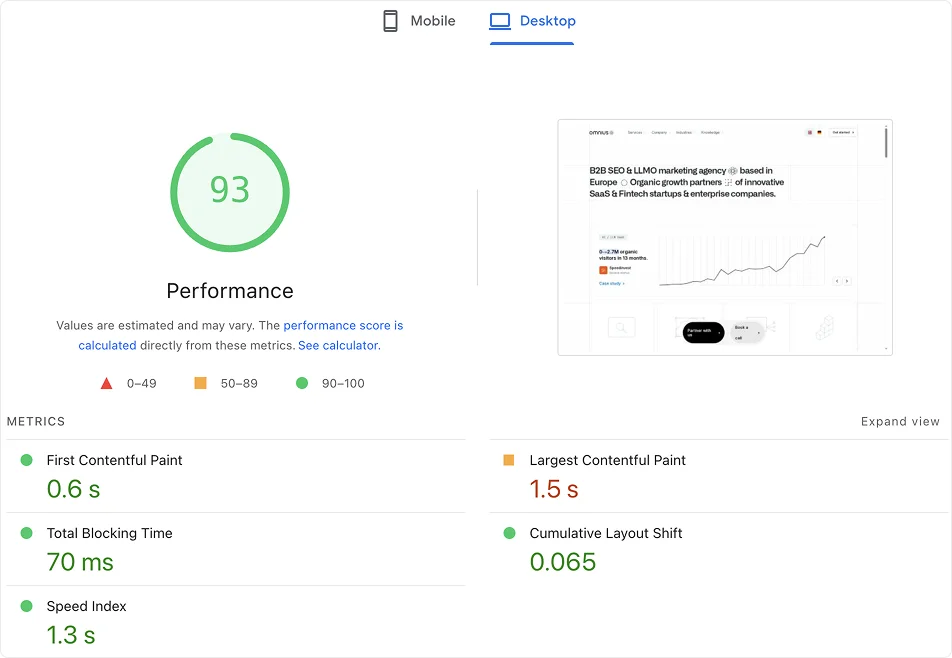

1. Speed & Core Web Vitals

Fast load times are critical. Generative crawlers don’t wait around.

Benchmarks to aim for:

- Largest Contentful Paint (LCP): < 2.5s

- Interaction to Next Paint (INP): < 200ms (INP replaced First Input Delay as a Core Web Vital in 2024)

- Cumulative Layout Shift (CLS): < 0.1

Minimize render-blocking JavaScript, heavy third-party scripts, and oversized images. The lighter and faster your site, the higher your odds of being parsed.
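A simple threshold check makes these benchmarks enforceable in a build or monitoring script. The Python sketch below uses the current Core Web Vitals set (LCP, CLS, and INP, the responsiveness metric that replaced FID); the measured values are hypothetical and would normally come from field data or a lab tool.

```python
# "Good" thresholds for the Core Web Vitals; values at or below the limit pass.
THRESHOLDS = {"LCP_s": 2.5, "INP_ms": 200, "CLS": 0.1}

def failing_metrics(measured: dict[str, float]) -> list[str]:
    """Return the metrics that exceed their threshold.
    A metric missing from measured is treated as failing."""
    return sorted(
        name for name, limit in THRESHOLDS.items()
        if measured.get(name, float("inf")) > limit
    )

# Hypothetical measurements for one page.
page_metrics = {"LCP_s": 3.1, "INP_ms": 140, "CLS": 0.05}
print(failing_metrics(page_metrics))
```

Treating a missing metric as a failure is a deliberate choice here, so that pages with no data are investigated rather than silently passed.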

2. Mobile-first accessibility

Most AI-driven queries come from mobile contexts, so your site must be fully accessible on smaller devices.

Best practices:

- Use responsive design with adaptive breakpoints

- Avoid hidden or collapsed content that requires JavaScript triggers, because AI crawlers may never see it

- Test critical pages on mobile-first crawlers to ensure nothing is blocked

3. Static & server-side rendering

Unlike Googlebot, most AI crawlers don’t fully execute JavaScript. That means client-side rendering (CSR) can leave them with a blank page.

Safer approaches include:

- Server-side rendering (SSR)

- Static site generation (SSG)

- Incremental static regeneration (ISR)

These ensure content is visible at first load, without depending on JS execution.
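To see a page roughly the way a non-rendering crawler does, check whether key phrases appear in the raw HTML outside of scripts. The Python sketch below does that with the standard-library HTML parser; the two HTML samples and the phrase are hypothetical, and the check is an approximation rather than a model of any specific crawler.

```python
from html.parser import HTMLParser

class VisibleTextExtractor(HTMLParser):
    """Collect text that is present in the markup, skipping script and style bodies."""
    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)

def missing_from_raw_html(raw_html: str, required_phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the page's non-script text."""
    extractor = VisibleTextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    text = " ".join(" ".join(extractor.parts).split()).lower()
    return [p for p in required_phrases if p.lower() not in text]

# Hypothetical responses: a client-rendered shell vs. a server-rendered page.
csr_html = '<html><body><div id="root"></div><script>render("Pricing starts at $49")</script></body></html>'
ssr_html = '<html><body><main><h1>Pricing</h1><p>Pricing starts at $49</p></main></body></html>'

print(missing_from_raw_html(csr_html, ["Pricing starts at $49"]))
print(missing_from_raw_html(ssr_html, ["Pricing starts at $49"]))
```

In the client-rendered sample the phrase exists only inside a script, so a crawler that does not execute JavaScript would never see it.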

4. Semantic & accessible HTML

Accessibility improvements often double as crawlability improvements. Clear semantic tags help both assistive tech and AI crawlers interpret your content.

- Use <header>, <main>, <nav>, <section>, <footer> consistently

- Provide alt text for images; some AI systems parse this as additional context

- Ensure keyboard and screen-reader accessibility, which also improves machine readability

5. Stable & reliable delivery

Finally, make sure your site is technically reliable. AI crawlers are easily discouraged by server issues.

- Use a global CDN to reduce latency

- Keep page weight light (< 2MB if possible)

- Monitor uptime and avoid recurring 5xx errors

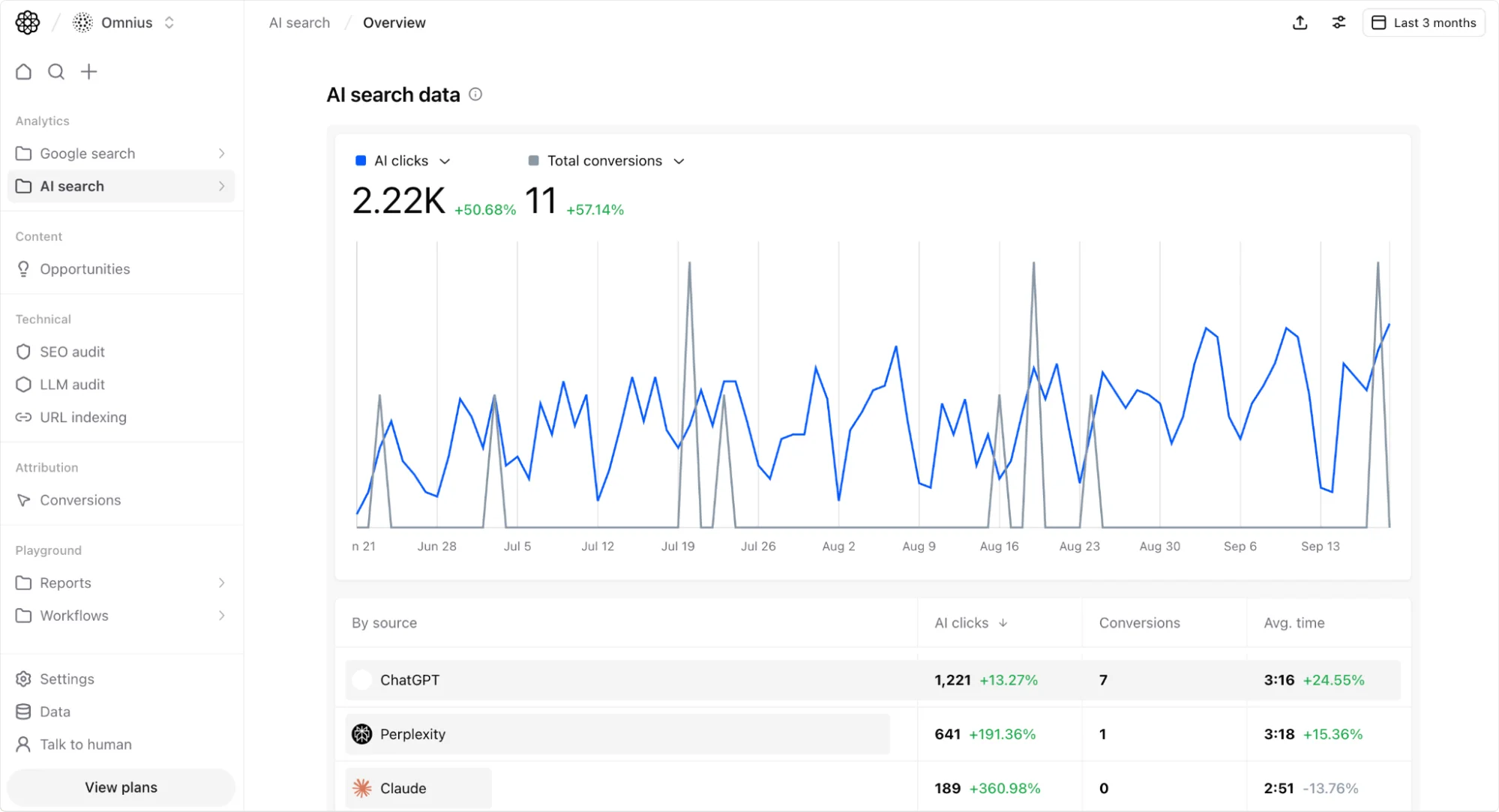

Measurement & KPIs for AI visibility


In the traditional SEO world, success was measured with rankings, impressions, and click-through rates. But in the AI-driven search era, those metrics don’t tell the full story. If an AI system answers a query directly without sending the user to your site, your analytics may show “lost traffic”, even though your brand was still present in the answer.

This creates a visibility gap. To close it, companies need to adopt AI-specific KPIs that reflect how often their content is cited, attributed, and trusted inside AI answers.

1. AI-specific visibility metrics

New metrics are emerging to track how your content appears in AI-driven results:

- Citation share – Percentage of AI answers (ChatGPT, Perplexity, Bing Copilot, Claude) that include your domain as a cited source

- Attribution accuracy – Whether your brand is cited correctly (name, URL, context)

- Topic coverage – How consistently your content surfaces for queries you want to own

- Competitive share of voice – How often competitors are cited versus your brand

Together, these metrics help you measure presence, not just clicks.
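Citation share can be estimated from a sample of AI answers collected by hand or through a monitoring tool. The Python sketch below assumes each sampled answer is recorded as a list of cited URLs; the sample data is hypothetical, and the result is only as representative as the prompts you sampled.

```python
from urllib.parse import urlparse

def citation_share(answers: list[list[str]], domain: str) -> float:
    """Return the fraction of answers that cite at least one URL on domain."""
    if not answers:
        return 0.0
    hits = sum(
        1 for cited_urls in answers
        if any(urlparse(u).netloc.lower().removeprefix("www.") == domain
               for u in cited_urls)
    )
    return hits / len(answers)

# Hypothetical sample: cited URLs for four AI answers to target queries.
sampled_answers = [
    ["https://www.example.com/report", "https://competitor.test/blog"],
    ["https://competitor.test/guide"],
    [],
    ["https://example.com/docs/api"],
]

print(citation_share(sampled_answers, "example.com"))
print(citation_share(sampled_answers, "competitor.test"))
```

Running the same calculation for competitor domains gives a simple share-of-voice comparison across the sampled queries.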

2. Crawl & access monitoring

If crawlers can’t access your content, it won’t be surfaced. That’s why log analysis is essential.

- Review server logs for requests from GPTBot, PerplexityBot, ClaudeBot, and Bing AI crawlers

- Track blocked requests, high error rates, or crawl gaps

- Set up alerts for spikes in 4xx/5xx errors from AI crawlers

This helps you spot and fix crawlability issues before they cost you visibility.
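A small log parser is enough to get started. The Python sketch below assumes access logs in the common combined format and matches bots by a substring of the user-agent field; the log lines are simplified, hypothetical examples, and the 20% alert threshold is an arbitrary starting point.

```python
import re
from collections import Counter, defaultdict

AI_BOTS = ("GPTBot", "PerplexityBot", "ClaudeBot")

# Combined log format: "METHOD path PROTO" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'"\S+ \S+ \S+" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def bot_status_counts(log_lines):
    """Count responses per AI bot, grouped into status classes such as 2xx or 5xx."""
    counts = defaultdict(Counter)
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if not match:
            continue
        for bot in AI_BOTS:
            if bot in match.group("agent"):
                counts[bot][match.group("status")[0] + "xx"] += 1
    return counts

def error_rate(counter: Counter) -> float:
    """Return the share of 4xx and 5xx responses in a bot's status counter."""
    total = sum(counter.values())
    return (counter["4xx"] + counter["5xx"]) / total if total else 0.0

# Hypothetical, simplified log lines.
logs = [
    '203.0.113.5 - - [10/Mar/2025:10:00:00 +0000] "GET /blog HTTP/1.1" 200 5120 "-" "ExampleAgent (compatible; GPTBot)"',
    '203.0.113.5 - - [10/Mar/2025:10:00:02 +0000] "GET /docs HTTP/1.1" 503 0 "-" "ExampleAgent (compatible; GPTBot)"',
    '203.0.113.9 - - [10/Mar/2025:10:01:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "ExampleAgent (compatible; ClaudeBot)"',
    '198.51.100.2 - - [10/Mar/2025:10:02:00 +0000] "GET / HTTP/1.1" 200 2048 "-" "SomeBrowser/1.0"',
]

counts = bot_status_counts(logs)
for bot, counter in counts.items():
    rate = error_rate(counter)
    flag = "ALERT" if rate > 0.2 else "ok"
    print(bot, dict(counter), f"{rate:.0%}", flag)
```

Scheduling a script like this against daily logs turns crawl health into a number you can track over time.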

3. Engagement proxies in a zero-click world

Since clicks are declining, brands need to measure indirect engagement signals:

- Mentions in AI answers (via manual spot checks or third-party monitoring tools)

- Search volume growth for branded terms after AI exposure (implied brand lift)

- User behavior inside AI-native interfaces (e.g., follow-up questions citing your brand)

These signals show whether zero-click citations are still driving awareness.

4. Evolving reporting frameworks

Reporting must evolve from “Who’s on page one?” to “Who’s in the AI’s answer?”

Practical steps:

1. Build AI visibility scorecards that track citations, attribution, and brand presence across platforms.

2. Segment reporting by platform (Google AI Overviews vs. ChatGPT vs. Perplexity).

3. Tie AI visibility back to business outcomes: leads, brand recall, or conversions that happen downstream.

There's a need for organizational alignment

Optimizing for AI-driven search isn’t just a technical task or a content task - it’s a company-wide effort. GEO and AEO require alignment across teams that don’t always work closely together. Without coordination, execution becomes fragmented, and your brand risks falling behind in AI visibility.

Here’s how different teams should own their part of the strategy:

- SEO & content teams – Focus on schema, question-driven content design, and building topical authority

- Developers & IT – Implement robots.txt, llms.txt, SSR/SSG, performance optimization, and error monitoring

- PR & brand teams – Secure external mentions, backlinks, and third-party authority signals

- Analytics & data teams – Track AI citations, monitor logs, and build AI-specific KPI dashboards

When all of these roles work together, technical GEO and AEO stop being siloed projects and become part of a unified visibility strategy.

Final takeaway

The future of online visibility won’t be decided only by who ranks on Google, but by who appears in AI answers. If AI systems can’t crawl, parse, or trust your site, your content won’t make it into the conversation, no matter how strong your traditional SEO might be.

Companies that invest now in:

- Technical crawlability and indexation

- Structured data and semantic HTML

- Modular, machine-readable content

- Authority and trust signals

- AI-specific measurement frameworks

…will build a durable edge as AI search becomes the default way people discover information.

The shift is already here. The question is whether your brand will be left behind in the zero-click era, or chosen as the answer.

At Omnius, we help SaaS and fintech brands future-proof their digital visibility with cutting-edge GEO strategies. We ensure your brand isn’t just found - it’s chosen by AI engines. Contact us.
